OpenAI’s Product Lead Reveals the 4-Part Framework for AI Product Strategy

Build AI products that scale profitably, retain users, and defend against commoditization

By Miqdad Jaffer, Product Lead at OpenAI:

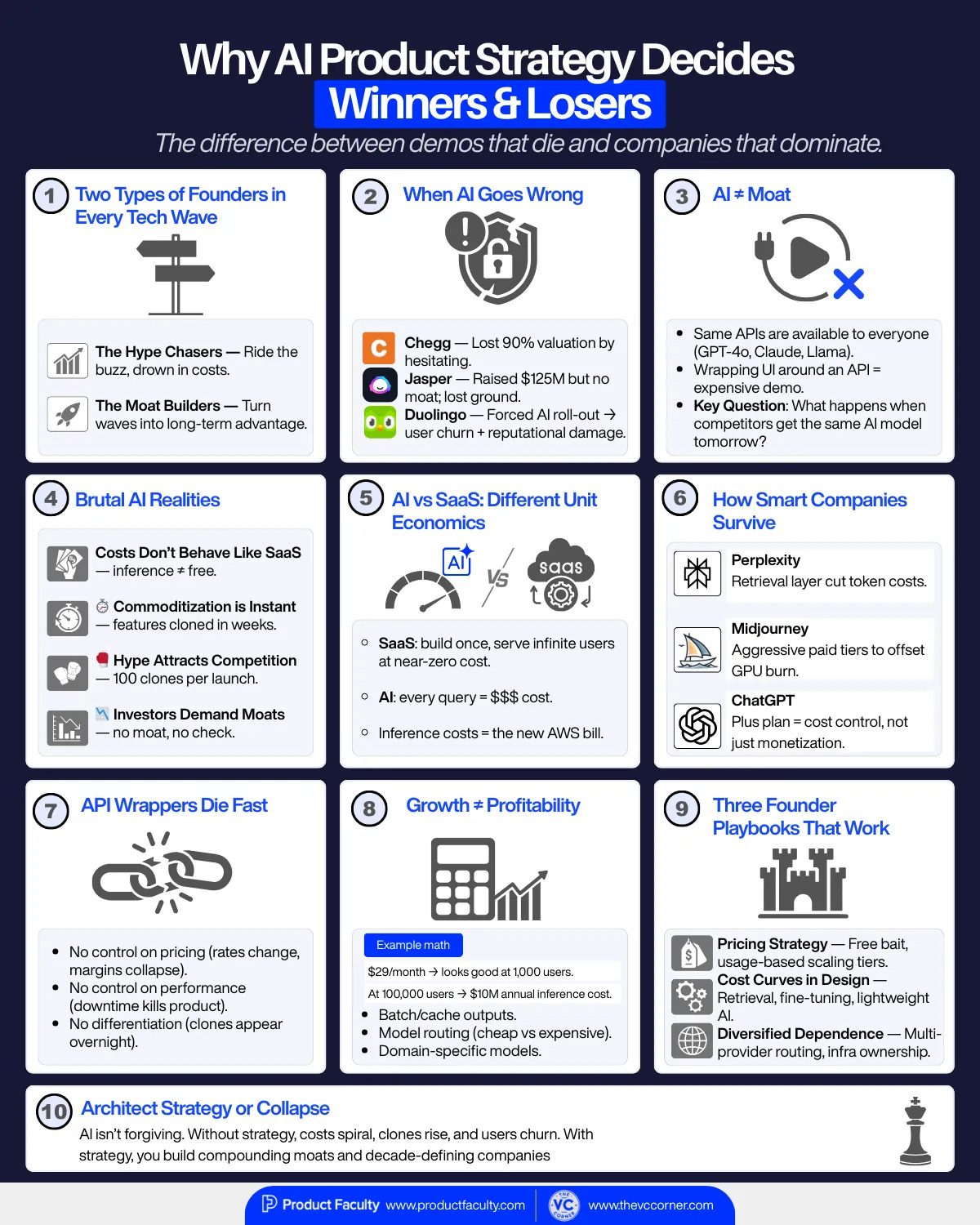

In every wave of technology, there are two types of founders:

Those who ride the hype and get crushed under their own costs.

Those who turn the wave into a moat and dominate a market for a decade.

AI is no different… except the stakes are higher. Because unlike SaaS or mobile, AI doesn’t forgive bad strategies.

Chegg lost 90% of their valuation because they failed to act on AI quickly enough. While students flocked to ChatGPT for instant, personalized help, Chegg hesitated, reacted late, and the market punished them brutally.

Jasper, once the golden child of AI writing, raised $125M at a $1.5B valuation and became the poster company for “AI wrappers.” But without a real moat, and with SaaS-style pricing that didn’t match its soaring inference costs, it quickly lost ground. As ChatGPT gained adoption, users churned, prices had to be slashed, and Jasper is no longer the category favorite.

Duolingo, instead of delighting users with thoughtful AI integration, pushed out AI tutors and cut staff in a way that felt forced and extractive. The result was devastating: reputational damage, hundreds of thousands of users churning, and 300,000 followers lost in a matter of weeks.

And these aren’t isolated missteps.

There are countless examples of companies bolting AI on as an afterthought, shipping gimmicky features without thinking about economics, or simply waiting too long to act… only to find that the market doesn’t give second chances.

That’s why, in my 6-week AI Product Strategy cohort, we’ll dig into how every one of these companies thought it could wait it out or ship later. (Apart from the live sessions, you’ll also get a written review of your own AI Product Strategy, plus $550 off.)

But in AI, time is compressed.

The adoption window is measured in quarters, not years.

Commoditization happens in weeks, not months.

Investors, users, and the market punish hesitation brutally.

So, without further ado, let’s dive straight into AI Product Strategy 101 for Founders: everything you need to know to not just survive this wave, but own it.

The Illusion of “Just Add AI”

Right now, every pitch deck has “AI-powered” slapped on the first slide. Founders think it gives them credibility. Investors nod. Customers get curious. But here’s the catch:

AI itself isn’t the moat. Everyone can access GPT-4o, Claude, Llama, and Mistral, so the barrier to entry is effectively zero. If your strategy is “use OpenAI’s API and wrap a UI around it,” you don’t have a company; you have an expensive demo that can be cloned overnight.

What separates winners from losers is whether you can answer this question:

What happens when your competitors get access to the exact same AI model tomorrow?

If your answer is “we’ll build faster,” you’ve already lost.

Why AI Breaks Founders Without Strategy

Here’s what makes AI brutal:

Costs Don’t Behave Like SaaS: In SaaS, once you build the product, marginal costs per user trend toward zero. In AI, every query, every generation, every inference has a real cost attached — tokens, GPUs, hosting. Without strategy, costs scale faster than revenue.

Commoditization Happens Overnight: In SaaS, features might take years to copy. In AI, they’re cloned in weeks. The only defense is strategic moats: proprietary data, trust, or distribution.

Hype Attracts Competition: Every new AI feature gets 100 clones on Product Hunt. Most vanish. But some take your market if you don’t defend it with strategy.

Investors Are Smarter Now: In 2021, “AI” on a deck raised millions. In 2025, VCs ask: What’s your moat when GPT-5 launches? How do you survive inference costs at 100M queries a month? If you don’t have answers, the check doesn’t come.

AI is not about building the flashiest demo.

It’s about designing the system around the AI:

How will you monetize it profitably when usage scales 10x?

How will you retain customers when the underlying models get better and cheaper every month?

How will you turn your distribution into a compounding advantage?

How will you build trust in an environment where hallucinations and privacy issues erode confidence?

That’s the difference between the AI companies that will die and the ones that will rule the future.

The winners will be the founders who don’t just “add AI,” but architect it into a product strategy that scales, defends, and compounds.

And here’s the truth: the gap between winners and losers in AI will open faster than any prior wave in tech.

Because when costs spiral, you don’t get years to fix it: you get months.

When commoditization hits, you don’t have quarters to react: you have weeks.

That’s why AI product strategy isn’t a “nice to have.”

It’s the only thing standing between hypergrowth and collapse.

AI Economics: The New Unit Economics of Startups

In SaaS, the playbook was simple:

Spend once to build the product.

Acquire a user.

Marginal cost to serve them = near zero.

Profits scale with every new customer.

That’s why SaaS gross margins typically sit around 70–80%, and why SaaS created billion-dollar giants off $29/month subscriptions.

But AI doesn’t play by SaaS rules. In AI, marginal costs are stubbornly real.

Why Marginal Costs Behave Differently in AI vs SaaS

Every AI query has a price tag attached.

A single ChatGPT query costs OpenAI fractions of a cent to several cents depending on the model.

Run that across millions of users, and suddenly your “free tier” burns millions a month.

In SaaS, scale lowers costs. In AI, scale can increase costs unless you’ve built efficiency into your product design.

Here’s the brutal truth: Inference costs are the new AWS bill. And just like early startups got destroyed by runaway cloud costs, AI startups today are bleeding from token bills they can’t control.

Case Study: Perplexity vs Midjourney vs ChatGPT

Perplexity understood the math early. Instead of running raw GPT calls for every query, they built a hybrid retrieval layer + LLM. By pulling relevant docs first, then summarizing, they cut token usage dramatically. Lower costs, faster responses, and more citations = better UX.
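The article doesn’t describe Perplexity’s actual pipeline, but the general retrieve-then-summarize idea is easy to sketch. Below is a minimal Python illustration, assuming a toy keyword-overlap scorer in place of a real search index or embedding model; `tokenize`, `score`, and `build_prompt` are hypothetical helpers. Only the top `k` passages reach the prompt, so the tokens you pay for scale with `k`, not with the size of the corpus.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric words: a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, doc: str) -> int:
    """Keyword overlap: number of query terms that also appear in the doc."""
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    return sum(min(q[t], d[t]) for t in q)


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Retrieve first: keep only the k best-matching docs, then ask the LLM to summarize them."""
    top = sorted(docs, key=lambda doc: score(query, doc), reverse=True)[:k]
    sources = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(top, start=1))
    return f"Answer using only these sources and cite them.\n{sources}\n\nQuestion: {query}"


# Usage with a tiny made-up corpus.
docs = [
    "Midjourney renders every image on GPUs.",
    "ChatGPT Plus launched at $20 per month.",
    "Retrieval keeps prompts short by selecting relevant passages.",
    "Caching avoids regenerating identical outputs.",
]
prompt = build_prompt("How does retrieval keep prompts short?", docs, k=1)
print(prompt)
```

With a real corpus of thousands of passages, the prompt still carries only `k` of them, which is where the token savings come from.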

Midjourney built community-driven virality on Discord. But the hidden story? GPU costs were astronomical. Every image rendered = compute burned. That’s why they pushed aggressive paid tiers quickly — because free users were unsustainable.

ChatGPT exploded with adoption (100M users in 2 months), but it nearly broke OpenAI’s compute budget. That’s why “ChatGPT Plus” launched at $20/month. Not just a monetization play, but a cost-containment move.

The pattern is clear: founders who survive long enough to scale do so because they design unit economics upfront.

The Hidden Trap of Token Costs & API Reliance

Most early AI startups are API wrappers. They rely 100% on OpenAI, Anthropic, or another foundation-model provider. That’s fine for a prototype. Deadly for a company.

Why?

You don’t control pricing. OpenAI raises API rates tomorrow? Your margins collapse.

You don’t control performance. Model latency or downtime? Your product breaks.

You don’t control differentiation. If the same API is available to everyone, what stops the next founder from copying your entire product in a weekend?

This is why API-first AI products die fast. They mistake building a demo for building a company.
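One way to soften the pricing and downtime risks is to avoid hard-wiring a single vendor. Here is a minimal Python sketch of cheapest-first failover, assuming each provider is wrapped in a plain function that raises a hypothetical `ProviderError` on timeouts or outages; the provider names and per-call prices are made up, and no real SDK is called. Note that it does nothing about differentiation: a fallback chain keeps the product up, but it doesn’t make it harder to copy.

```python
from typing import Callable, Sequence


class ProviderError(Exception):
    """Hypothetical error a provider wrapper raises on timeouts, rate limits, or outages."""


# (name, assumed cost per call in USD, function that sends the prompt)
Provider = tuple[str, float, Callable[[str], str]]


def complete_with_fallback(prompt: str, providers: Sequence[Provider]) -> tuple[str, str]:
    """Try providers cheapest-first and fail over to the next one on error."""
    errors: list[str] = []
    for name, _cost, call in sorted(providers, key=lambda p: p[1]):
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Usage with stand-in providers (no real API is called).
def provider_down(prompt: str) -> str:
    raise ProviderError("503 service unavailable")


def provider_up(prompt: str) -> str:
    return f"summary of: {prompt}"


providers = [("provider-b", 0.004, provider_up), ("provider-a", 0.001, provider_down)]
name, text = complete_with_fallback("Summarize this support ticket", providers)
print(name, "->", text)
```

The cheaper `provider-a` is tried first, fails, and the request is served by `provider-b` instead of surfacing an outage to the user.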

How to Model Costs When Usage Scales 10x

Let’s run a simple thought experiment:

Suppose you charge $29/month per user.

Your average user makes 500 queries/month.

Each query costs you $0.002 in tokens.

That’s $1.00 in raw inference cost per user/month.

Gross margin = ~97%. Beautiful.

Now scale:

You grow from 1,000 users → 100,000 users.

Queries balloon from 500,000 → 50 million/month.

Inference costs = $1K/month → $100K/month (about $1.2M/year).

Suddenly your AWS bill looks tiny in comparison.
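The arithmetic above is a linear model, and writing it down makes it easy to stress-test. A minimal Python sketch, using the $29/month, 500 queries/user, and $0.002/query figures above plus one hypothetical “usage scales 10x” scenario; it counts inference only and ignores hosting, support, and other costs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """Inputs for a simple, linear inference-cost model (illustrative numbers)."""
    users: int
    price_per_user: float    # monthly subscription, USD
    queries_per_user: int    # average queries per user per month
    cost_per_query: float    # blended inference cost per query, USD


def monthly_inference_cost(s: Scenario) -> float:
    """Total inference spend per month: users x queries x cost per query."""
    return s.users * s.queries_per_user * s.cost_per_query


def gross_margin(s: Scenario) -> float:
    """Gross margin after inference only (ignores hosting, support, etc.)."""
    revenue = s.users * s.price_per_user
    return (revenue - monthly_inference_cost(s)) / revenue


base = Scenario(users=1_000, price_per_user=29.0, queries_per_user=500, cost_per_query=0.002)
scaled = Scenario(users=100_000, price_per_user=29.0, queries_per_user=500, cost_per_query=0.002)
# Hypothetical: the same 100k users each send 10x more queries.
heavy = Scenario(users=100_000, price_per_user=29.0, queries_per_user=5_000, cost_per_query=0.002)

for label, s in [("base", base), ("scaled", scaled), ("heavy", heavy)]:
    cost = monthly_inference_cost(s)
    print(f"{label}: ${cost:,.0f}/month, ${cost * 12:,.0f}/year, margin {gross_margin(s):.1%}")
```

In this model, 100,000 users cost $100,000/month in inference (about $1.2M/year) at the same ~97% margin as 1,000 users; it’s the 10x-usage scenario that drags margin down to about 65.5%. Per-user usage and per-query cost are the variables to watch.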

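This is the trap. Inference spend grows in lockstep with every user you add, so the ~97% margin only holds while usage per user and cost per query stay flat. Let usage scale 10x (say, power users averaging 5,000 queries/month) and each user costs $10/month to serve; move those queries to a model that costs 10x more per query and each user costs $100/month against $29 in revenue. Margins that look fine at 1,000 early users can crumble at 100,000 unless you: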

Batch or cache intelligently. (Don’t re-generate the same outputs 50 times.)

Use model routing. (Run cheap models for simple tasks, expensive ones only when needed.)

Build proprietary infra. (Train small domain-specific models that are cheaper to run.)
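The caching and routing levers above can be sketched together. Below is a minimal Python illustration, assuming an exact-match cache keyed on a normalized prompt and a crude length/keyword heuristic for routing; the model names `small-model`/`large-model`, their prices, and `fake_model` are hypothetical stand-ins for whatever client you actually use. A production router would need something smarter than this heuristic, and exact-match caching only helps when prompts repeat.

```python
import hashlib
from typing import Callable

# Hypothetical model tiers and per-call prices in USD (not real provider pricing).
PRICES = {"small-model": 0.0002, "large-model": 0.01}


def route(prompt: str) -> str:
    """Toy routing rule: long or analytical prompts get the large model, everything else the small one."""
    if len(prompt.split()) > 200 or "analyze" in prompt.lower():
        return "large-model"
    return "small-model"


class CachedRouter:
    """Serves repeated prompts from a cache and routes new ones by cost tier."""

    def __init__(self, call_model: Callable[[str, str], str]):
        self.call_model = call_model  # (model_name, prompt) -> completion
        self.cache: dict[str, str] = {}
        self.spend = 0.0

    def complete(self, prompt: str) -> str:
        # Normalize case and whitespace so trivially different prompts share one entry.
        key = hashlib.sha256(" ".join(prompt.lower().split()).encode()).hexdigest()
        if key in self.cache:
            return self.cache[key]  # cache hit: no inference cost
        model = route(prompt)
        result = self.call_model(model, prompt)
        self.spend += PRICES[model]
        self.cache[key] = result
        return result


# Usage with a stand-in model function (no real API is called).
def fake_model(model: str, prompt: str) -> str:
    return f"[{model}] answer to: {prompt}"


router = CachedRouter(fake_model)
for _ in range(50):
    router.complete("What is your refund policy?")
router.complete("Analyze churn by cohort for Q3")
print(f"total spend: ${router.spend:.4f}")
```

Fifty identical refund questions cost a single small-model call; only the “Analyze” prompt pays the large-model price.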

The Real Math Behind AI Profitability

Let’s be blunt: most AI startups right now aren’t profitable, even if they look like they’re growing. They’re subsidizing user adoption with VC dollars while ignoring the economics.

The ones that win are doing three things differently:

Pricing Strategically.

Free tier = bait.

Paid tiers kick in fast, with usage-based pricing that scales with costs.

Example: Midjourney cutting off “free” generations because the math broke.

Building Cost Curves Into Design.

Perplexity’s retrieval step is a cost moat.

Grammarly’s incremental fine-tuning makes corrections cheaper over time.

Canva’s AI tools are lightweight enhancements, not cost-draining centerpieces.

Diversifying Dependence.

Routing across multiple providers (OpenAI, Anthropic, Cohere, Mistral).

Training domain-specific models where possible.

Owning infrastructure when scale demands it.
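To see why usage-based tiers protect margins, compare a flat subscription with one that bills overage. A minimal Python sketch, assuming a hypothetical plan of $29/month with 500 included queries and $0.01 per extra query, against the earlier $0.002/query inference cost. In this toy model a 20,000-query power user runs at roughly -38% gross margin on the flat plan and about +82% once overage is billed.

```python
def monthly_bill(queries: int, base_fee: float = 29.0, included: int = 500,
                 overage_per_query: float = 0.01) -> float:
    """Flat fee covers `included` queries; each extra query is billed at `overage_per_query`."""
    extra = max(0, queries - included)
    return base_fee + extra * overage_per_query


def user_margin(queries: int, cost_per_query: float = 0.002, **plan: float) -> float:
    """Gross margin after inference cost for one user on a given plan."""
    bill = monthly_bill(queries, **plan)
    return (bill - queries * cost_per_query) / bill


power_user = 20_000  # queries/month, hypothetical
flat = user_margin(power_user, overage_per_query=0.0)  # pure $29 subscription
metered = user_margin(power_user)                      # $29 + $0.01 per query over 500
print(f"flat plan margin: {flat:.1%}, usage-based margin: {metered:.1%}")
```

Light users never see the overage, so the headline price stays at $29, while revenue from heavy users rises with the costs they generate.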

If you build AI without modeling your unit economics:

You will mistake growth for success. You will bleed money faster the more you scale. You will wake up one day with negative margins and no investor patience.

But if you design your economics into the product from Day 1, you flip the script:

Your unit costs drop as usage grows (thanks to caching, routing, and infra efficiencies).

Your competitors can’t undercut you (because your economics are structurally better).

Your growth compounds into a real moat, not just hype.

That’s the difference between being a demo and being a decade-defining company.

The 4D Framework for AI Product Strategy:


Subscribe to The VC Corner to keep reading this post and get 7 days of free access to the full post archives.