Dario Amodei Says AGI Is 1-3 Years Away. Here's His Full Breakdown.

The CEO of Anthropic just laid out the most detailed AI timeline any lab leader has ever given. Most people aren't paying attention.

“The most surprising thing has been the lack of public recognition of how close we are to the end of the exponential. It is absolutely wild that you have people talking about the same tired old hot button political issues. And around us, we’re near the end of the exponential.” — Dario Amodei

Dario runs Anthropic. One of three companies building the most powerful AI models on Earth.

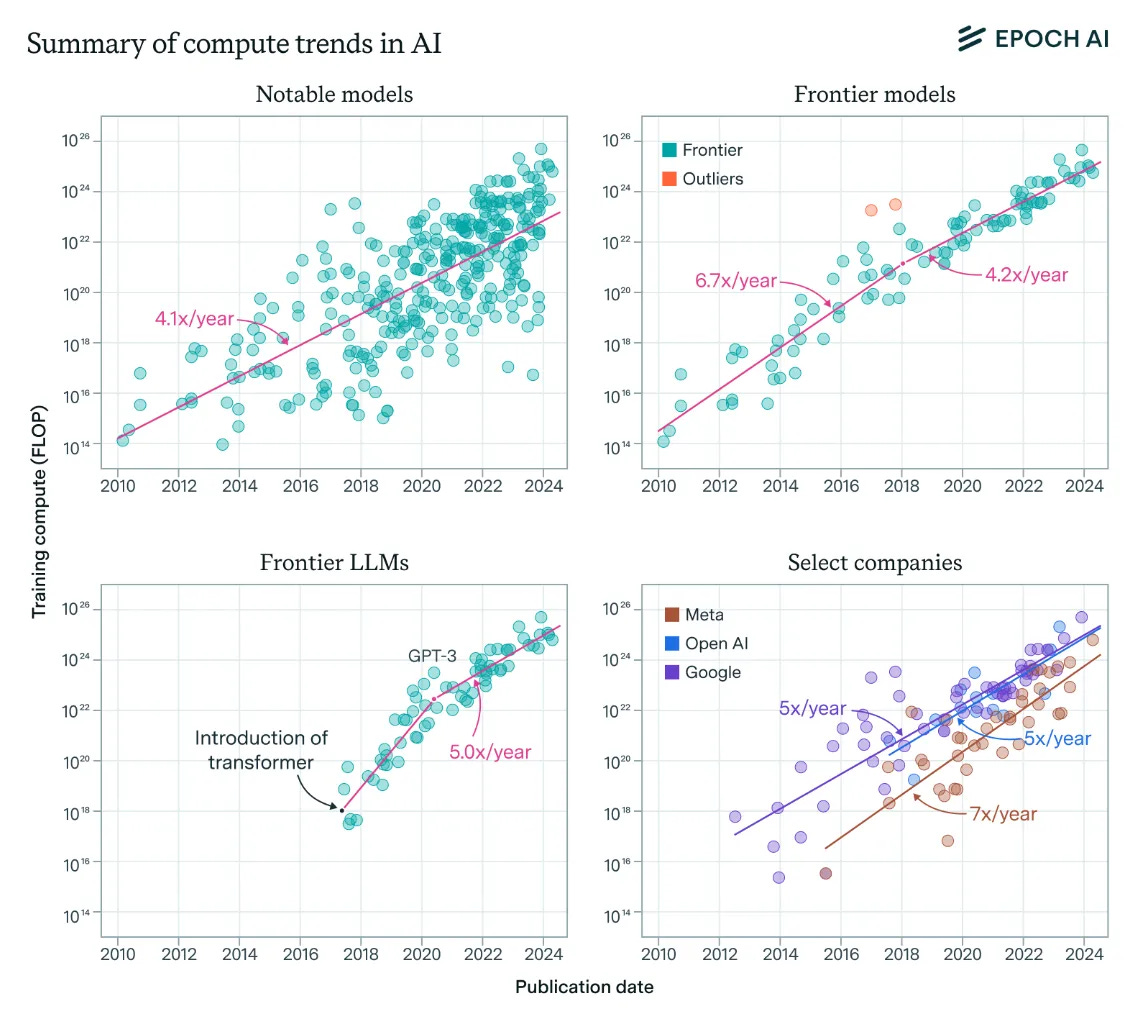

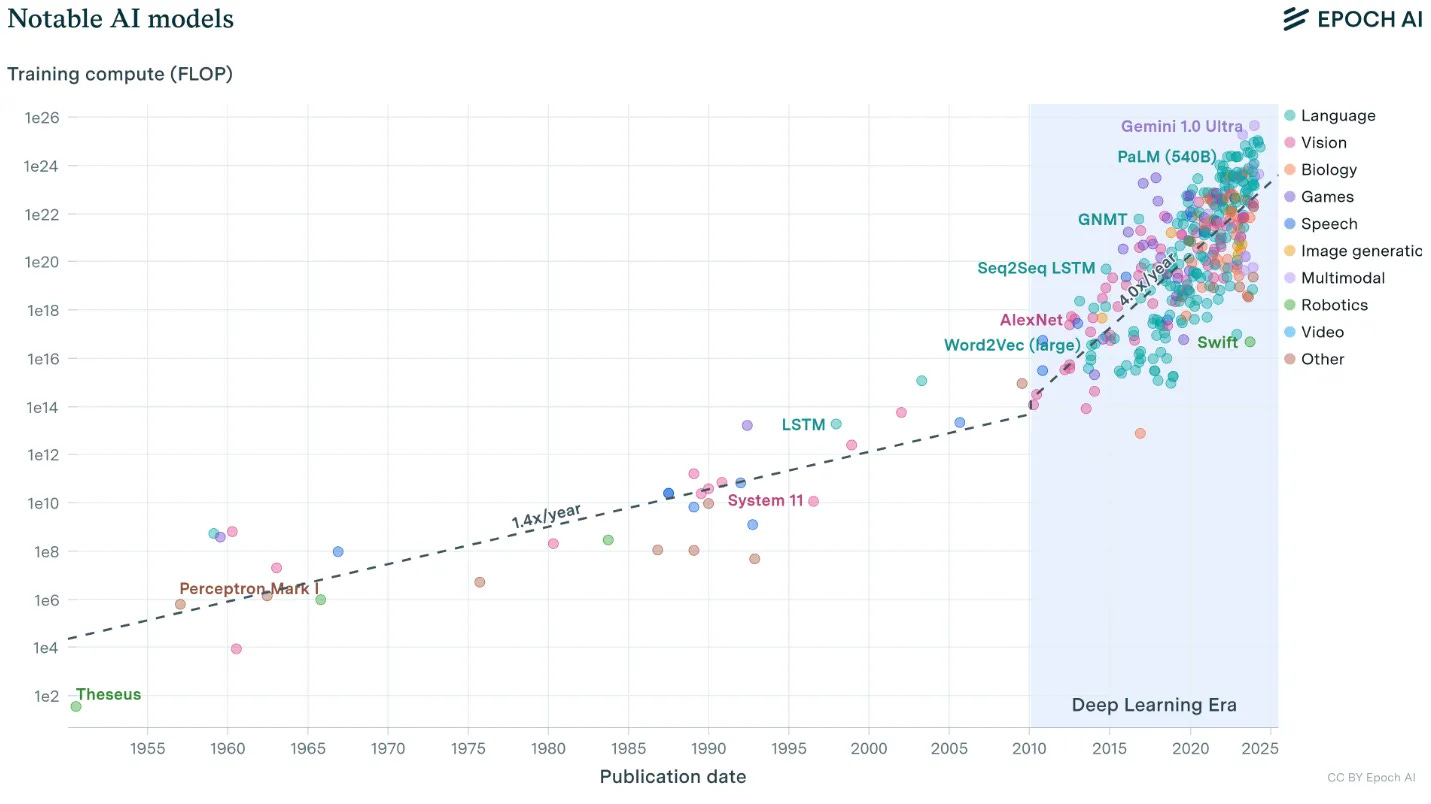

For 23 years, we’ve been riding an exponential in compute and capabilities. That curve is approaching something. Most people have no idea how close we are.

I watched the full 3-hour podcast so you don’t have to.

Here are the 10 biggest takeaways.

1. AGI in 1-3 Years (He Put Numbers on It)

Dario doesn’t hedge anymore.

“Within 10 years, we’ll get to country of geniuses in the data center. I’m at 90% on that. With coding, I think we’ll be there in one or two years.”

Here’s the framework:

Verifiable tasks have objective answers. Code compiles or it doesn’t. A math proof checks out or it doesn’t. These get solved first.

Non-verifiable tasks are trickier. How do you verify a novel is great before readers judge it? How do you confirm a strategy is correct before it plays out?

Dario is 90% confident the full package arrives within a decade.

His real hunch? One to three years for most knowledge work.

That’s not a prediction from a blogger. That’s the CEO of the company building Claude. And if you’ve used Claude Opus 4.6, you can already feel how close we are.

2. Software Engineering Gets Fully Automated First

This is the one that should keep every founder and CTO up at night.

“In a year or two, models can do software engineering end-to-end. Setting technical direction, understanding the context of the problem. Yes, I mean all of that.”

Not just writing code. The entire job:

Design documents

Technical specifications

Architecture decisions

Full implementation

Why software first? Because software is verifiable. Code works or it doesn’t. That verification signal lets you train models at massive scale.

Software also represents millions of jobs. Hundreds of billions in wages. Trillions in economic value.

One to two years. End to end.

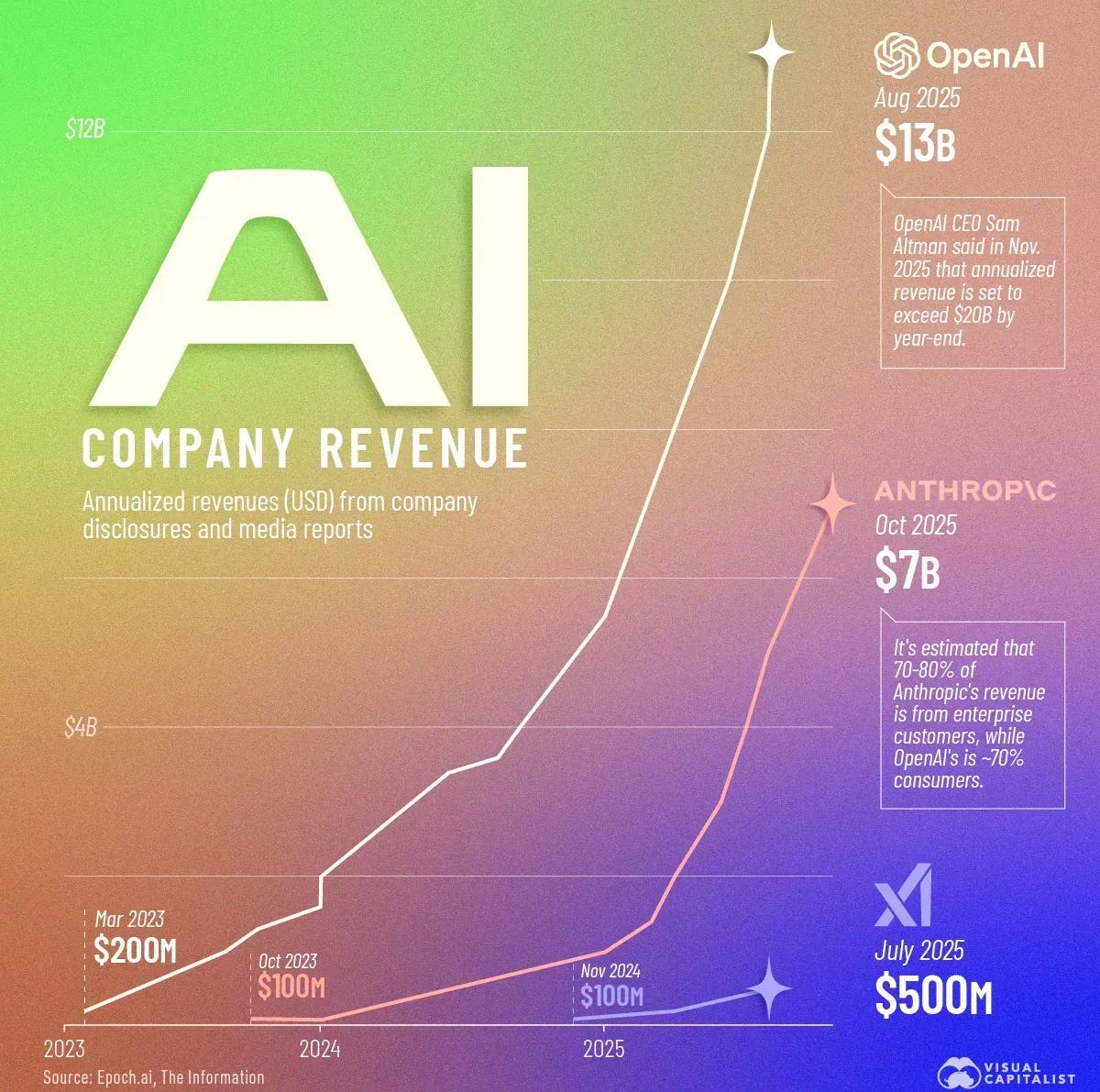

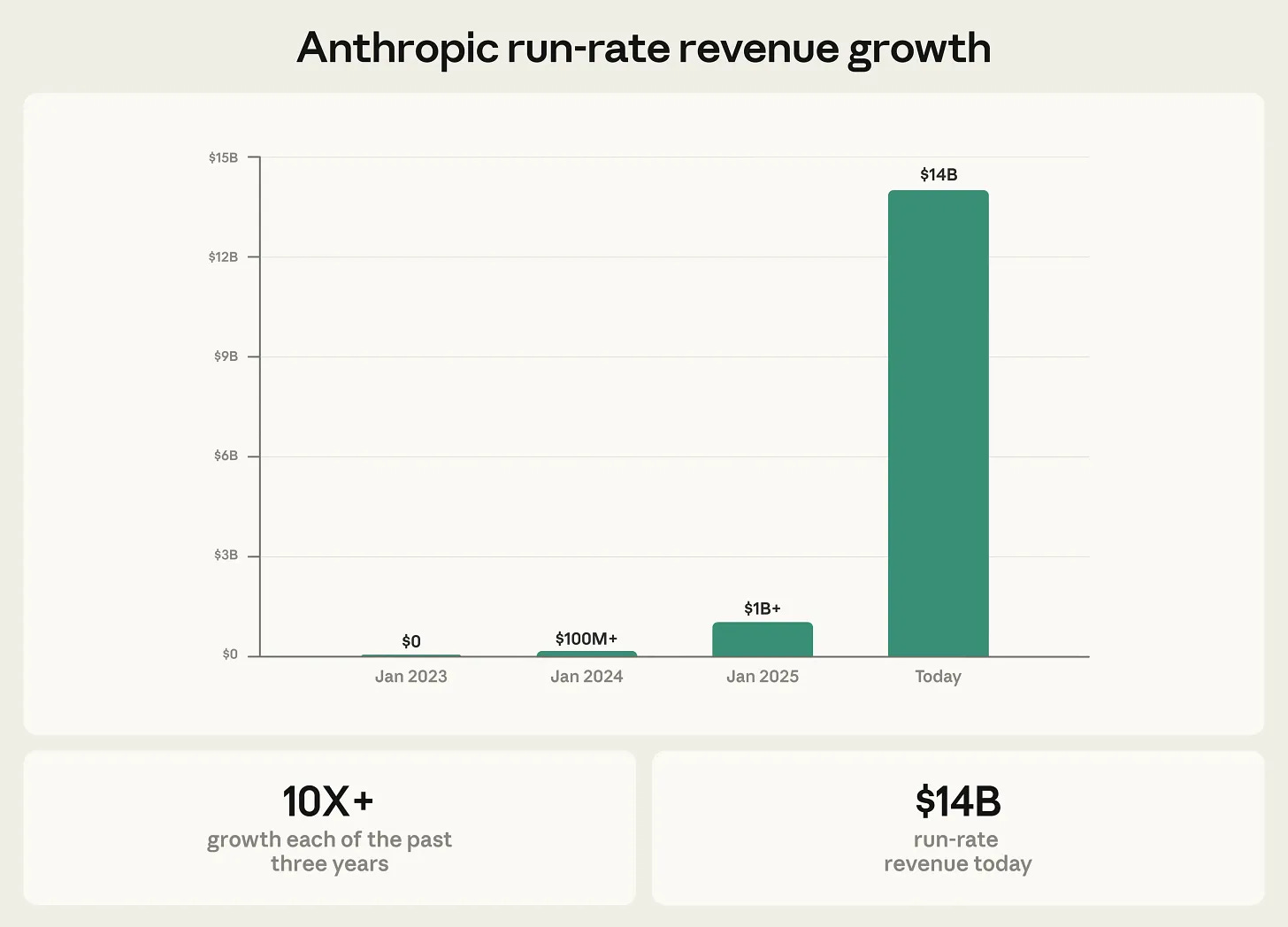

3. AI Revenue Hits Trillions by 2030

The economics are staggering.

“It is hard for me to see that there won’t be trillions of dollars in revenue before 2030. In 2028, we get the real country of geniuses. The revenue has been going into the low hundreds of billions by 2028. Then it accelerates to trillions.”

Anthropic's own numbers illustrate the trajectory:

Revenue up roughly 10x per year. Three years running.

By 2028, in Dario's view, the real “country of geniuses” arrives, with revenue already in the low hundreds of billions. Then every domain where intelligence is the constraint unlocks at once:

Medicine

Biology

Materials science

Energy

Trillions by 2030 stops sounding crazy when you see the math.
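Here is a back-of-the-envelope version of that math. If revenue multiplies by a constant factor every year, it grows as starting revenue × growth^years. The Python sketch below is purely illustrative. The $1B starting point is a hypothetical placeholder, not a reported Anthropic figure, and the growth rates are assumptions you can change.

```python
def project_revenue(r0: float, growth: float, years: int) -> list[float]:
    """Revenue for year 0..years, assuming a constant annual growth multiple."""
    return [r0 * growth ** n for n in range(years + 1)]

# Hypothetical starting point: $1B of annual revenue (placeholder, not a reported figure).
start = 1e9

# Compare sustained 10x growth with a slower, still-fast 3x per year.
for g in (10.0, 3.0):
    path = project_revenue(start, g, years=3)
    print(f"{g:>4}x per year:", ", ".join(f"${r / 1e9:,.0f}B" for r in path))
```

At 10x per year, $1B becomes $1 trillion in three years, a factor of 1,000. At 3x per year, the same three years yield only 27x. Whether growth stays near 10x is the whole ballgame for any 2030 forecast.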

4. There Are Two Exponentials, Not One

This is the insight most people miss.

“There’s one fast exponential that’s the capability of the model. Then there’s another fast exponential downstream of that, which is the diffusion into the economy.”

First curve: Technical capability. GPT-3 to GPT-4 to Claude. Moves at research speed.

Second curve: Economic adoption. Production deployment. Workflow changes. Employee training. Moves at institutional speed.

Both are fast. Neither is instant. And there’s a gap between them.

That gap is where the biggest opportunities live right now. Tools like Claude’s Cowork system are already bridging that gap for early adopters.

“That curve can’t go on forever. The GDP is only so large.”

But between now and that ceiling? Massive value creation.

5. The "Big Blob" Hypothesis Still Holds Nearly a Decade Later

In 2017, before GPT-1 even existed, Dario wrote “The Big Blob of Compute Hypothesis.”

The core idea:

“All the cleverness, all the techniques, all the ‘we need a new method’ doesn’t matter very much. Only a few things matter: raw compute, quantity of data, quality of data, training time, objective functions.”

Nearly a decade later? Still holds.

“What’s changed is we’re now seeing the same scaling in RL that we saw for pre-training. With RL, it’s actually just the same.”

People worried that something fundamental changed. That scaling laws broke. That we hit a wall.

Dario says no. Same dynamics. Same log-linear returns. Just applied to a new training phase.
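“Log-linear” means capability rises by roughly a fixed amount each time compute is multiplied by 10. A minimal Python sketch, assuming a made-up fit where a score gains 5 points per 10x of compute. The coefficients are hypothetical and not taken from any published scaling law.

```python
import math

def loglinear_score(compute: float, a: float, b: float) -> float:
    """Hypothetical log-linear fit: the score rises by b for every 10x more compute."""
    return a + b * math.log10(compute)

# Placeholder coefficients, chosen only for illustration.
A, B = 0.0, 5.0

# Compute is expressed relative to a baseline run (1x, 10x, 100x, 1000x).
for compute in (1.0, 10.0, 100.0, 1000.0):
    print(f"{compute:>6.0f}x compute -> score {loglinear_score(compute, A, B):.1f}")
```

Every row costs 10x more compute than the last but adds the same 5 points. That is why steady log-linear returns still demand exponentially growing compute budgets.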

This is why he has conviction on timelines. The curve hasn’t slowed down.

6. Every "Barrier" to AI Keeps Dissolving

Skeptics keep pointing to things AI “can’t do.” Dario keeps watching those barriers evaporate.

“People keep coming up with barriers that end up dissolving within the big blob of compute. People talked about semantics, reasoning, code and math. Suddenly it turns out you can do all of it.”

The graveyard of “AI can’t do this”:

Semantics: ✓ Dissolved

Reasoning: ✓ Dissolved

Code and math: ✓ Dissolved

Continual learning: → Dissolving now

“I think continual learning might not be a barrier at all. We maybe just get there by pre-training generalization and RL generalization.”

What looks like a fundamental limitation keeps turning into an engineering problem. Or it turns out it never existed in the first place.

One barrier people assumed would hold forever: “AI can’t write like a human.” That’s dissolving too. The right prompting approach already closes most of that gap.

A million-token context window already holds days or weeks of human reading, so models might not need continual learning the way humans do.
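To sanity-check “days or weeks,” here is a rough conversion in Python. It assumes about 0.75 English words per token (a common rule of thumb that varies by tokenizer), a reading speed of 250 words per minute, and 8 hours of reading per day. All three are assumptions, not figures from the podcast.

```python
def reading_days(tokens: int, words_per_token: float = 0.75,
                 words_per_minute: float = 250.0, hours_per_day: float = 8.0) -> float:
    """Estimate how many full reading days a person needs to get through `tokens` tokens."""
    words = tokens * words_per_token        # tokens -> approximate English words
    minutes = words / words_per_minute      # words -> minutes of reading
    return minutes / 60.0 / hours_per_day   # minutes -> hours -> days

print(f"{reading_days(1_000_000):.2f} days at 250 wpm")   # prints "6.25 days at 250 wpm"
print(f"{reading_days(1_000_000, words_per_minute=100.0):.2f} days at 100 wpm")
```

Under those assumptions, a million tokens is 50 hours of reading, or about six full days. At a slower, study-pace 100 words per minute it stretches past two weeks.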

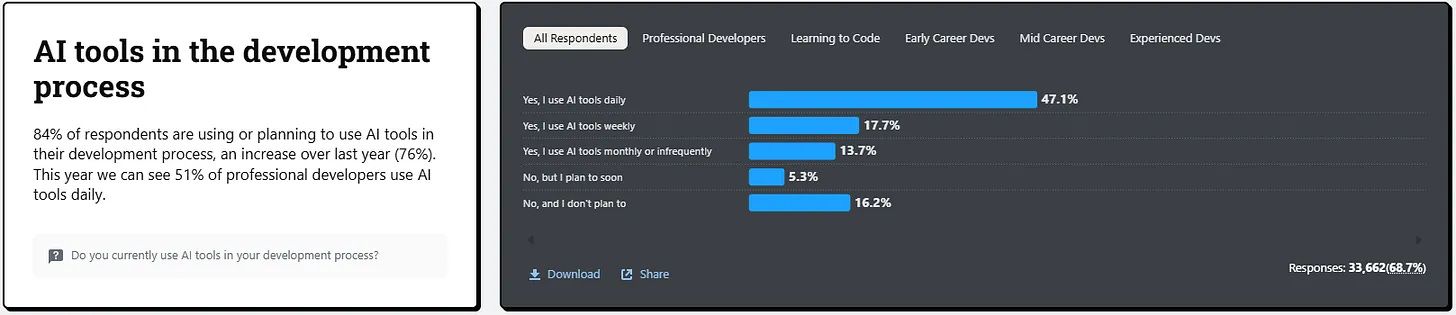

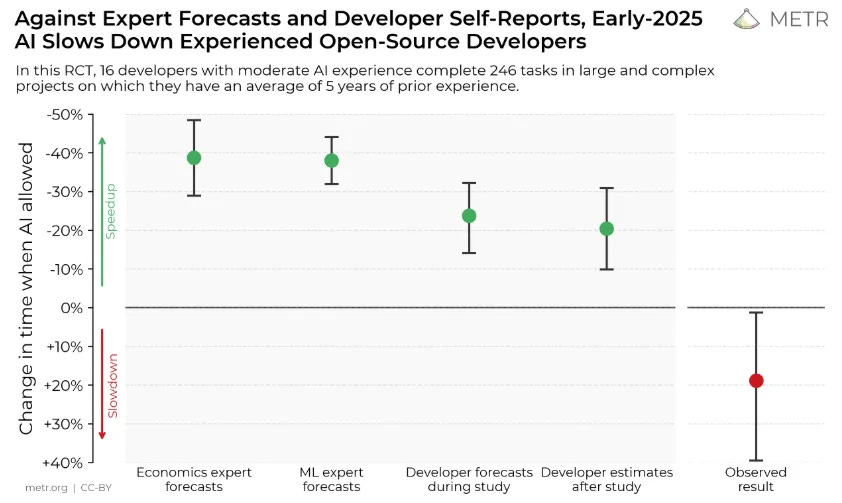

7. AI Productivity Gains Are Accelerating Faster Than Expected

Software engineering is the canary in the coal mine. And the canary is screaming.

“Right now, coding models give maybe 15, maybe 20% total factor speedup. Six months ago, it was maybe 5%. It’s now getting to the point where it’s one of several factors that matters.”

The trajectory tells the story:

Six months ago: 5% speedup

Today: 15-20% speedup

Six months from now, if the trend holds: 40%+ speedup

5% doesn’t register. 20% starts to matter. 50% changes everything.

But Dario warns against simplistic thinking:

“There’s a huge spectrum. 90% of lines written is very different from 100% of lines. That’s different from 90% of end-to-end tasks.”

Writing 90% of the code counts for far less than it sounds if the job is only 30% code. The job also includes design, debugging, documentation, communication, and leadership.
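That gap is essentially Amdahl's law: speeding up one part of a job only helps in proportion to that part's share of the total. The sketch below assumes, hypothetically, that coding is 30% of an engineer's time and that AI makes that portion 10x faster (90% less time). “90% of lines written” is not the same thing as 90% less coding time; the mapping here is an illustrative assumption.

```python
def overall_speedup(fraction_affected: float, local_speedup: float) -> float:
    """Amdahl's law: overall speedup when only part of the work gets faster.

    fraction_affected: share of total time spent on the accelerated part (0..1).
    local_speedup: how many times faster that part becomes (10 means 90% less time).
    """
    remaining_time = (1 - fraction_affected) + fraction_affected / local_speedup
    return 1 / remaining_time

# Hypothetical: coding is 30% of the job, and AI makes that part 10x faster.
print(f"{overall_speedup(0.30, 10):.2f}x overall")               # prints "1.37x overall"
# Even an infinitely fast coding step is capped by the untouched 70%.
print(f"{overall_speedup(0.30, float('inf')):.2f}x ceiling")     # prints "1.43x ceiling"
```

A 10x faster coding step yields only about a 1.37x overall speedup, and even infinitely fast coding caps out near 1.43x, because the other 70% of the work is untouched. That is why the end-to-end prediction in section 2 matters far more than any line-count statistic.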

Still, momentum is building. Anthropic already has engineers who write zero code.

“This GPU kernel, I used to write it myself. I just have Claude do it.”

And it’s not just code. People are automating entire workflows with Claude Cowork, from SEO to data analysis to content ops. That shift is spreading fast.

8. He Spends Up to 40% of His Time on Culture (Here's Why)

When everything moves this fast, culture becomes the bottleneck.

“I probably spend a third, maybe 40% of my time making sure the culture of Anthropic is good. Everyone thinks of themselves as team members. Everyone works together instead of against each other.”

At 2,500 employees, maintaining coherence is hard. Other AI labs are already showing cracks.

“We’ve seen as some of the other companies have grown, we’re starting to see decoherence and people fighting each other.”

Dario’s method: radical transparency.

Every two weeks he sends “Dario Vision Quest”:

3-4 pages

Covers models, products, industry, geopolitics

No corporate speak

Straight talk

“That direct connection has a lot of value that is hard to achieve when you’re passing things down six levels deep.”

Culture isn’t a nice-to-have at this speed. It’s the thing that lets you move fast without breaking apart.

9. He Makes Consequential Decisions in Two Minutes

This one is humbling for anyone who thinks leadership means careful deliberation.

“Some critical decision will be someone just comes into my office and is like, Dario, you have two minutes. Should we do thing A or thing B? Someone gives me this random half-page memo. I’m like, I don’t know, I have to eat lunch, let’s do B. That ends up being the most consequential thing ever.”

Building at this speed means:

You make 30 decisions a day

You don’t know which ones matter

You don’t have time to analyze most of them

You rely on intuition, judgment, and the culture you built

Some decisions get two weeks of analysis. Some get two minutes over lunch.

You won’t know which category a decision belonged to until years later.

This is what operating at the frontier actually looks like.

10. We're Near the End of the Exponential (and the Beginning of Another)

Dario keeps returning to this phrase.

“Near the end of the exponential.”

He means the capability curve. The one that’s been running for 23 years. The one that took us from nothing to systems that pass the bar exam, write code, and reason through advanced mathematics.

That curve is approaching human-level intelligence across most domains: 1-3 years for verifiable tasks like coding, and within 10 years (he puts it at 90%) for everything else.

“We would know it if you had the country of geniuses in a data center. Everyone in this room would know it. Everyone in Washington would know it. We don’t have that now. That’s very clear.”

But we’re close. Closer than almost anyone outside the labs realizes.

Near the end of one curve means near the beginning of another. The economic curve. Measured in trillions. Industries transformed. Human capability augmented at a scale we’ve never seen.

The most surprising thing? How few people see what’s coming.

What This Means for You

If you’re a founder: Build for a world where AI handles 90% of what your employees do today. The companies that figure out human-AI collaboration first will dominate the 2030s. Don’t hire for tasks. Hire for judgment, taste, and the ability to direct AI systems.

If you’re an investor: Productivity gains are compounding faster than most forecasts assume. By Dario's rough estimates, coding went from a 5% to a 15-20% speedup in six months. If that pace continued, it could reach 80% within 18 months. That changes the terminal value of every software company on the planet. Reprice accordingly.

If you work in tech: The window to become exceptional at working with AI is closing fast. In two years, this skill goes from differentiator to table stakes. If you haven’t started, here’s the fastest on-ramp: Claude for SEO and content workflows or Claude for spreadsheets and data. Start now. Not next quarter. Now.

If you’re in any other industry: AI is coming for knowledge work the way machines came for manual labor. But faster. Much faster. The people who learn to work alongside these systems will thrive. The people who ignore the exponential won’t see it until it’s past them.

The capability curve is approaching human-level intelligence.

The economic curve is just getting started.

This is happening faster than most people expect.

Full Podcast: Dario Amodei — “We are near the end of the exponential”

If this breakdown saved you 3 hours, share it with one founder or investor who needs to see it. They'll thank you later.
