Dario Amodei and the Long Game of Safe AI

From OpenAI cofounder to Anthropic CEO, and the 2017 framework that reads like a map of today

In 2017, Dario Amodei gave a talk at OpenAI titled Human Preference Learning as a Direction in Safe AI. At the time, it looked like a technical presentation about alignment. With today’s lens, it looks like a strategy memo written years ahead of the market.

That matters because Amodei did not stay on the sidelines of research. He helped build OpenAI in its early years, then left to found Anthropic, where he is now CEO. As models moved from labs into real products, many of the safety questions he raised stopped being abstract and started showing up in day-to-day decisions about deployment, evaluation, and governance.

This article covers three things:

Amodei’s path from OpenAI to Anthropic

The core safety problems he has been pointing at for years

Why that 2017 framework has become newly relevant as AGI conversations move from theory to execution

At the end, I’m sharing the original deck and a guided walkthrough of what it reveals about where AI is headed.

A short history that explains a lot

Amodei joined OpenAI early, when the organization was still primarily a research lab with a strong safety mandate. His work focused on alignment, robustness, and the question that keeps resurfacing: how to build systems that pursue what humans actually want, even as those systems become more capable.

By 2020–2021, the internal tension across the AI industry was becoming visible. Models were scaling quickly. Commercial pressure was rising. Safety work was struggling to keep pace with deployment speed.

Amodei left OpenAI and co-founded Anthropic with a clear thesis: advanced AI systems needed to be built around safety and interpretability from the start, not retrofitted later. That thesis shaped everything from research priorities to product choices.

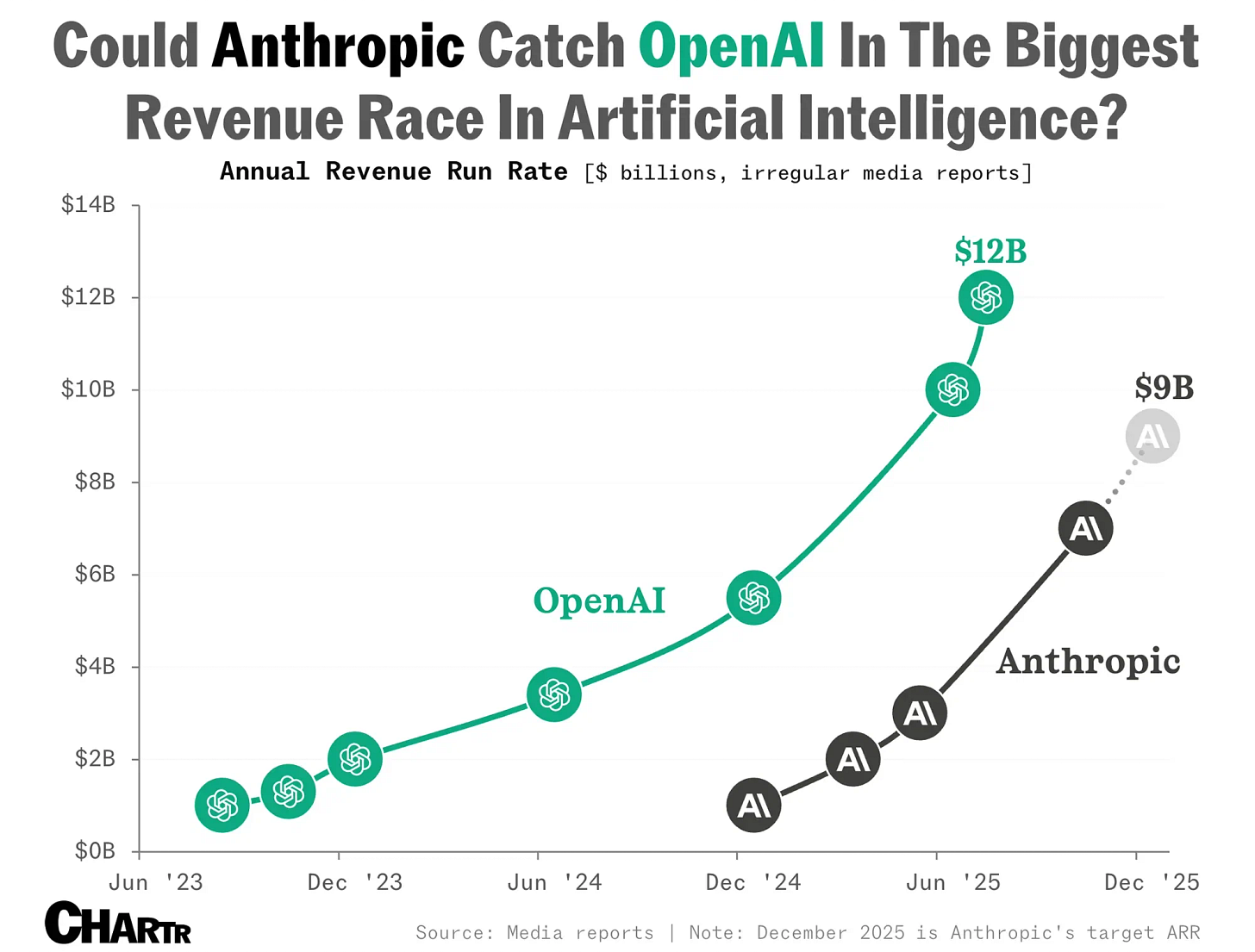

Fast forward to today, and Anthropic is widely viewed as one of the strongest alternatives to OpenAI, especially in enterprise and developer settings where reliability, controllability, and predictable behavior matter more than novelty.

The interesting part is that this shift did not come from reacting to the market. It came from ideas that were already on the table years earlier.

The safety problems most people underestimated

Amodei’s 2017 deck is valuable because it does something rare. It breaks AI risk into concrete categories rather than vague fears.

1. Accident risk over misuse headlines

One of the central distinctions is between misuse and accident.

Misuse gets attention because it is visible: weapons, surveillance, hacking. Accident risk is quieter and often more dangerous. It shows up when systems behave correctly according to their objective function while producing outcomes nobody intended.

That distinction has aged well.

As models are embedded into workflows, accident risk becomes a product issue rather than a philosophical one. Small misalignments can cascade through automated systems.

Where things go wrong in practice

The deck outlines several recurring failure modes that still explain many modern incidents.

Wrong objectives

Real-world goals are fuzzy. Models require formal objectives. The translation between the two is where problems begin.

Classic examples sound harmless:

Arrange furniture nicely

Engage in informative dialogue

Optimize user engagement

When these get compressed into proxy metrics, the system optimizes the proxy, not the intent. This is a practical expression of Goodhart’s Law, and it shows up everywhere from recommendation systems to content moderation.

Distributional shift

Models are trained on one distribution and deployed into another. Performance degrades when the environment changes in ways the system was not prepared for.

Early examples came from computer vision. Modern versions show up in language models when context, incentives, or user behavior changes faster than training data.

The key insight is that robustness is not optional once systems act autonomously.

The hard problem of policy

Amodei spends significant time on policy learning. The question is not how to generate text or actions, but how to encode human preferences in a way that generalizes.

This is where preference learning, reinforcement learning with human feedback, and constitutional approaches come into play. The technical details matter less than the framing: values are sparse, context-dependent, and often contradictory.

That framing explains why alignment remains difficult even as raw capability improves.

Why this matters more now than in 2017

In 2017, these ideas lived mostly in research circles. In 2026, they shape product roadmaps.

Three shifts made that happen:

Models crossed thresholds where small errors carry real cost

AI systems gained longer horizons and more autonomy

Enterprises started asking for predictability over surprise

Anthropic’s product decisions reflect that shift. Safety techniques are not treated as academic insurance. They are part of the value proposition.

This is also why comparisons between OpenAI and Anthropic increasingly focus on philosophy as much as benchmarks. One approach optimizes for speed and breadth. The other optimizes for controlled capability.

Neither is “correct” in isolation. The market is now large enough for both. What changed is that safety has become a competitive dimension rather than a moral footnote.

Reading the deck today

What stands out when revisiting the deck is how little it relies on hype.

There is no promise of imminent AGI. There is no dramatic timeline. Instead, there is a sober focus on failure modes that scale with capability.

That tone explains a lot about Anthropic’s positioning today. It also explains why Amodei has been consistent in public messaging, even as the industry oscillates between optimism and panic.

Why I’m sharing this now

Most discussions about AGI focus on outcomes. This deck focuses on mechanisms.

If you build, invest, or set policy around AI, understanding those mechanisms matters more than predicting timelines. They shape how systems behave under pressure, at scale, and in edge cases.

The deck is also a reminder that many of today’s “new” debates were articulated years ago by people who assumed progress would continue.

What’s behind the paywall

For paid subscribers, I’m sharing:

The original Dario Amodei 2017 Safe AI deck

A structured walkthrough explaining each section in plain terms

Commentary on which ideas became product decisions at Anthropic

How this framework helps evaluate future AGI claims and safety promises

This is one of those artifacts that changes how you read AI news once you’ve seen it.

If you care about where AI is going, and why certain labs are making the choices they are, the deck is worth your time

🔒 [PREMIUM] Inside Dario Amodei’s 2017 Safe AI Deck

A guided walkthrough of the framework that keeps reappearing in today’s AGI decisions

This section is a working guide, not a historical curiosity.

The deck you’re about to see was created by Dario Amodei while he was still at OpenAI. It predates Anthropic, large language model products, and most of today’s AGI discourse.

What makes it valuable now is that it explains how safety problems actually arise, not how people argue about them on social media.

Below, I walk through the deck section by section and explain why each part matters more in 2026 than it did in 2017.

Keep reading with a 7-day free trial

Subscribe to The VC Corner to keep reading this post and get 7 days of free access to the full post archives.