DeepSeek R1: The AI Disruption No One Saw Coming

How DeepSeek R1 Is Redefining the Global AI Race

For years, OpenAI, Google DeepMind, and Anthropic have dominated the artificial intelligence space. But DeepSeek R1 just changed the game.

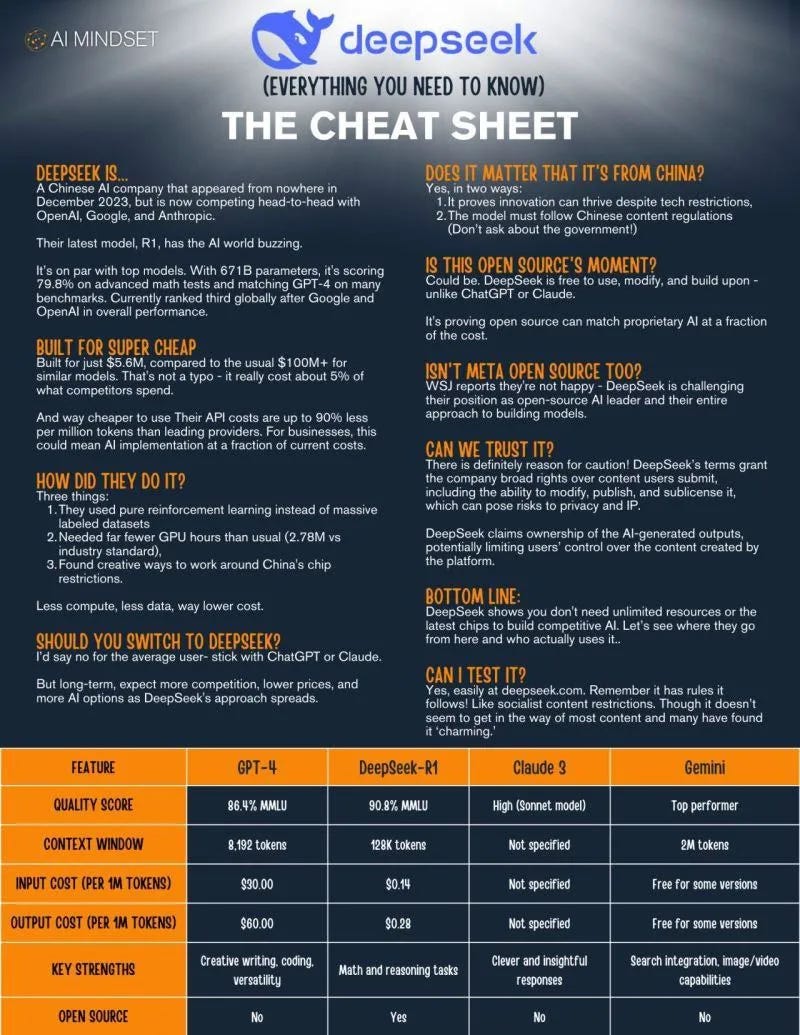

What makes DeepSeek R1 different? How is it able to rival models like GPT-4, Claude 3, and Gemini while being significantly cheaper?

Below is a breakdown of everything you need to know about DeepSeek R1 in one cheat sheet:

Key Highlights

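Ultra-low reported training cost: Roughly 5% of the low-end estimate for GPT-4

671B total parameters, about 37B active per token: Competing with top models

Open-weight advantage: Weights released under the MIT license, free to use and modify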

Global AI disruption: A new player reshaping the AI landscape

This isn't just another AI model—it's faster, cheaper, and optimized for efficiency, challenging the AI industry's biggest players. And here’s the headline-grabbing stat:

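DeepSeek reports a training cost of just $5.57 million

That’s roughly 18–90x cheaper than OpenAI’s GPT-4, which is estimated to have cost between $100M and $500M to train. Note that the DeepSeek figure covers GPU time for the final training run of the underlying base model, not prior research, staff, or hardware purchases.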
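The ratio is easy to check yourself. Here is a minimal sketch using only the figures quoted above; the GPT-4 range is an outside estimate rather than a disclosed number, and the function name `cost_ratio` is just an illustrative helper.

```python
# Figures quoted in this article. The GPT-4 range is an estimate, not a disclosed number.
DEEPSEEK_COST_USD = 5.57e6
GPT4_LOW_USD = 100e6
GPT4_HIGH_USD = 500e6


def cost_ratio(other_cost: float, deepseek_cost: float = DEEPSEEK_COST_USD) -> float:
    """Return how many times larger `other_cost` is than the DeepSeek figure."""
    return other_cost / deepseek_cost


low = cost_ratio(GPT4_LOW_USD)    # ratio against the low-end GPT-4 estimate
high = cost_ratio(GPT4_HIGH_USD)  # ratio against the high-end GPT-4 estimate

print(f"GPT-4 estimate is {low:.0f}x to {high:.0f}x the DeepSeek figure")
print(f"DeepSeek figure is {100 / low:.1f}% of the low-end GPT-4 estimate")
```

Run as written, this gives a range of about 18x to 90x, which is where the "roughly 5%" headline comes from when you compare against the low-end estimate.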

Why does this matter?

AI development just became significantly cheaper

Chinese labs have closed much of the gap in the AI race

Big Tech’s AI business model is now under threat

But there’s more to the story than just efficiency. DeepSeek R1’s answers track Chinese government positions on sensitive topics, which raises questions about AI censorship, and its hosted service raises data privacy concerns.

So, how does DeepSeek R1 work? And what does it mean for the future of AI?

Let’s break it down.

What Is DeepSeek R1?

DeepSeek R1 is a reasoning AI model designed for complex problem-solving, similar to OpenAI’s o1 model. It excels in coding, mathematics, and logical reasoning, making it a powerful tool for AI-assisted tasks.

But what makes it truly revolutionary is how efficiently it was trained.

DeepSeek R1’s Breakthrough Innovations

✅ DeepSeekMoE (Mixture of Experts):

Activates only a small subset of expert sub-networks for each token, saving compute power

Similar in spirit to the mixture-of-experts design GPT-4 is widely rumored to use, but optimized further
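To see why routing saves compute, here is a minimal sketch of generic top-k expert routing in plain Python. It is not DeepSeek’s implementation: the toy experts, the router scores, and the function names (`route_top_k`, `moe_forward`) are all hypothetical, and the softmax gate is an assumption chosen for simplicity.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_scores, k=2):
    """Weighted sum of the selected experts' outputs; the rest never run."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))


# Four toy "experts"; in a real model each is a feed-forward sub-network.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]
scores = [0.1, 2.0, -1.0, 1.5]  # hypothetical router scores for one token

chosen = route_top_k(scores, k=2)
output = moe_forward(3.0, experts, scores, k=2)
print(chosen, output)
```

Only two of the four experts are evaluated for this token. Scale the same idea up and a model can hold hundreds of billions of parameters while touching only a fraction of them per token.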

✅ DeepSeekMLA (Multi-Head Latent Attention):

Compresses the attention key-value cache, making AI inference cheaper and faster

Allows longer context windows with less hardware cost
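A rough back-of-the-envelope sketch shows why caching a compressed latent matters. All dimensions below are illustrative assumptions, not DeepSeek’s published configuration, and the helper `kv_cache_bytes` is hypothetical. It also ignores the small extra positional component that a real latent-attention cache would carry.

```python
def kv_cache_bytes(tokens, layers, per_token_elems, bytes_per_elem=2):
    """Total cache size for `tokens` of context, assuming 2-byte elements."""
    return tokens * layers * per_token_elems * bytes_per_elem


# Illustrative (assumed) model shape.
N_HEADS, HEAD_DIM = 32, 128
LATENT_DIM = 512          # size of the compressed per-token latent
LAYERS, CONTEXT = 60, 32_000

# Standard attention caches a key and a value vector for every head.
full = kv_cache_bytes(CONTEXT, LAYERS, 2 * N_HEADS * HEAD_DIM)
# Latent attention caches one compressed vector per token instead.
latent = kv_cache_bytes(CONTEXT, LAYERS, LATENT_DIM)

print(f"full cache:   {full / 2**30:.1f} GiB")
print(f"latent cache: {latent / 2**30:.1f} GiB")
print(f"reduction:    {full // latent}x")
```

With these assumed numbers the cache shrinks by a factor of 16. The exact savings depend on the real model shape, but the direction is the point: less memory per token means longer contexts on the same hardware.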

✅ H800 GPU Optimization:

Trained on Nvidia H800 GPUs, an export-compliant variant of the H100 with reduced chip-to-chip bandwidth

Custom low-level GPU programming helped work around that bandwidth limit

✅ Multi-Token Prediction & Load Balancing:

More efficient training per step, slashing compute costs
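The intuition behind multi-token prediction is that each position supplies more than one training signal. The sketch below only builds the prediction targets; it does not model DeepSeek’s actual prediction modules, and the function name `multi_token_targets` is a hypothetical helper.

```python
def multi_token_targets(tokens, depth):
    """Pair each position index with the next `depth` tokens to predict."""
    pairs = []
    for i in range(len(tokens) - depth):
        pairs.append((i, tokens[i + 1 : i + 1 + depth]))
    return pairs


sentence = ["the", "cat", "sat", "on", "the", "mat"]

next_token = multi_token_targets(sentence, depth=1)  # classic next-token setup
two_tokens = multi_token_targets(sentence, depth=2)  # predict two tokens ahead

signals_1 = sum(len(t) for _, t in next_token)
signals_2 = sum(len(t) for _, t in two_tokens)
print(signals_1, signals_2)
```

On this toy sentence, predicting two tokens ahead yields 8 target tokens instead of 5 from the same text, which is the sense in which each training step does more work.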

✅ Distillation & Knowledge Transfer:

Some observers suspect it learned from the outputs of existing AI models (GPT-4, Claude), though this is unconfirmed

Enhances performance without needing massive training datasets

Key Takeaway: AI Just Became Massively Cheaper to Build

DeepSeek R1 suggests that competitive AI no longer requires massive budgets. This shakes up the AI industry, especially for companies betting on expensive, closed-source models.

But there’s a big downside.

The Hidden Problem: DeepSeek R1 Is Politically Aligned

DeepSeek R1 isn’t just a technological breakthrough—it’s a government-aligned AI model.

📌 Built-In Censorship:

Promotes Chinese Communist Party (CCP) views on Taiwan, Hong Kong, Tibet, and other political issues

Suppresses information that contradicts China’s official stance

🔓 Can It Be Jailbroken?

Yes, early research shows that internet slang and indirect phrasing can bypass censorship filters

But at its core, DeepSeek R1 is aligned with China’s political agenda

DeepSeek R1’s Major Privacy Risks

Using DeepSeek R1 comes with data security concerns—especially for companies outside China.

📂 User data is likely stored in China

📂 Chinese authorities can legally access AI-generated data

📂 There’s no transparency on how user data is protected

💡 What this means for businesses:

If you use DeepSeek’s cloud-based API, your data could be accessed by Chinese authorities

To mitigate risk, use DeepSeek models on your own hardware—but censorship concerns remain

Why DeepSeek R1 Is Sending Shockwaves Through the AI Industry

DeepSeek R1 is not just another AI model—it’s a paradigm shift in AI development.

1️⃣ Nvidia’s Stock Is in Trouble

DeepSeek’s low-cost training method suggests AI models won’t rely on Nvidia’s most expensive chips in the future. That’s bad news for Nvidia’s margins.

2️⃣ Big Tech’s AI Costs Are Now a Problem

OpenAI, Google, and Anthropic operate on high AI compute costs

DeepSeek just proved AI can be trained for a fraction of the price

3️⃣ The US-China AI Race Just Took a Turn

The US banned exports of Nvidia’s top-tier AI chips to China

DeepSeek found a way to work around the ban and still produce a competitive AI model

Final Thoughts: AI Just Became a Geopolitical Arms Race

DeepSeek R1 is not just an AI model—it’s a warning shot to the global AI industry.

The cost of AI training is plummeting

China is now an AI superpower

AI models will increasingly reflect political narratives

This isn’t just about who has the best AI anymore. It’s about who controls AI, how it’s trained, and what values it reflects.

AI is moving faster than ever—and the battle for AI dominance is just beginning.

FAQ - DeepSeek R1: Everything You Need to Know

🔹 What is DeepSeek R1?

DeepSeek R1 is a next-gen reasoning AI model, built to compete with OpenAI’s o1 and Anthropic’s Claude 3 Sonnet. It’s designed for advanced math, logic, and coding tasks, and it's surprisingly efficient and cheap to run compared to U.S. models.

But here’s what makes it really interesting:

Open weights (unlike OpenAI and Anthropic, which keep their models locked down).

Ridiculously low training costs ($5.57M vs. OpenAI’s rumored $100M+).

Built on Nvidia H800 GPUs, the export-compliant chips Nvidia sold in China, suggesting that cutting-edge AI can be developed despite U.S. chip export controls.

It’s a major shift in the AI landscape, and everyone—from Silicon Valley to Beijing—is paying attention.

🔹 How did DeepSeek train an AI model for just $5.57M?

Short answer? Insane optimization.

DeepSeek pulled off one of the most cost-effective AI training runs in history. Here’s how:

Mixture of Experts (MoE) architecture: Instead of activating the whole model, R1 only runs the parts needed, saving compute power.

Memory compression via Multi-Head Latent Attention (MLA): This cuts down inference costs, making R1 run cheaper and faster than traditional models.

Lower precision computing (FP8 calculations): Less compute with only a small loss in accuracy. DeepSeek figured out how to squeeze maximum performance from every GPU cycle.

Custom GPU programming: They wrote low-level GPU code, below the level most teams work at, to run efficiently on the restricted H800 GPUs (which have less chip-to-chip bandwidth than the H100).

DeepSeek is proving that smart engineering can outpace brute-force computing power.
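To get a feel for what lower precision costs, here is a minimal sketch that rounds ordinary floats to 3 mantissa bits, the mantissa width of the common FP8 E4M3 format. It is a simplification under stated assumptions: it ignores FP8’s limited exponent range and any scaling tricks, and `round_mantissa` is a hypothetical helper, not a library call.

```python
import math


def round_mantissa(x: float, mantissa_bits: int = 3) -> float:
    """Round x to `mantissa_bits` explicit mantissa bits (exponent range ignored)."""
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)               # x = m * 2**e with 0.5 <= |m| < 1
    scale = 2 ** (mantissa_bits + 1)   # +1 accounts for the implicit leading bit
    return math.ldexp(round(m * scale) / scale, e)


values = [0.1, 0.37, 1.0, 3.14159, -42.5]
rounded = [round_mantissa(v) for v in values]
rel_errors = [abs(q - v) / abs(v) for v, q in zip(values, rounded)]

for v, q, err in zip(values, rounded, rel_errors):
    print(f"{v:>10} -> {q:<12} relative error {err:.2%}")
```

Each value lands within about 6% of the original. That is a lot of rounding for a single number, which is why low-precision training depends on careful scaling and on keeping sensitive steps in higher precision.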

🔹 Is DeepSeek R1 violating the U.S. AI chip ban?

No. It’s completely legal.

DeepSeek used H800 GPUs, which were legal to export to China when they were sold. The U.S. first restricted Nvidia’s H100 and A100 chips, assuming China couldn’t train leading models without them, and only added the H800 to the restricted list in October 2023. DeepSeek just proved that assumption wrong.

And that raises a big question:

💡 Did the chip ban actually make China better at AI?

By forcing DeepSeek to work around hardware limitations, the ban may have pushed the company to innovate faster than expected.

🔹 Is DeepSeek R1 censored?

Yes. And this is where things get tricky.

Like all AI models built in China, DeepSeek R1 follows strict government regulations. That means:

It won’t answer politically sensitive questions (e.g., Taiwan, Hong Kong, Tibet).

It aligns with Chinese government narratives in history and geopolitics.

It has built-in censorship filters to block certain responses.

However, jailbreaks already exist. Researchers found that by asking the model in internet slang, they could bypass some of the filters. But at the end of the day, the underlying bias remains.

If your use case requires unfiltered AI, this isn’t the model for you.

🔹 Can DeepSeek R1 be used outside of China?

Technically, yes. But there are major privacy concerns.

User data sent to DeepSeek’s API is stored in China.

Chinese authorities can legally demand access to that data.

It’s unclear what security protections (if any) exist.

The safest way to use DeepSeek R1? Run it on your own servers. If you’re sending queries to DeepSeek’s cloud, assume your data isn’t private.

🔹 What’s the difference between DeepSeek R1 and R1-Zero?

DeepSeek actually built two models:

R1-Zero – Trained using pure reinforcement learning, without a supervised fine-tuning stage first.

R1 – A more polished model that adds a small set of curated “cold-start” examples and supervised fine-tuning around the reinforcement learning, for better readability and usability.

What’s mind-blowing is how R1-Zero picked up reasoning behaviors from reward signals alone, without human-written reasoning examples. Many see that as a meaningful step toward AGI, and it supports the idea that AI can teach itself complex reasoning.
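Here is a minimal sketch of the idea, loosely based on the group-relative scoring described in DeepSeek’s R1 paper: sample several answers to the same question, score them with a simple rule, and reward each answer relative to its group. The exact-match reward and both function names are illustrative assumptions, and the actual policy update is omitted.

```python
import statistics


def rule_based_reward(answer: str, reference: str) -> float:
    """Toy rule: 1.0 for an exact match after trimming whitespace, else 0.0."""
    return 1.0 if answer.strip() == reference.strip() else 0.0


def group_relative_advantages(rewards):
    """Normalize each reward against the mean and spread of its own group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0:
        return [0.0 for _ in rewards]  # every sample tied: no learning signal
    return [(r - mean) / std for r in rewards]


# Four hypothetical sampled answers to a question whose answer is "42".
samples = ["42", "41", " 42 ", "7"]
rewards = [rule_based_reward(s, "42") for s in samples]
advantages = group_relative_advantages(rewards)
print(rewards, advantages)
```

Correct answers get a positive advantage and wrong ones a negative advantage, so the model is nudged toward whatever reasoning produced the right result. No human has to label the reasoning itself.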

🔹 Could DeepSeek R1 be the start of AGI?

It’s a step in that direction, but not quite there yet.

What makes R1 interesting is that:

It learned to reason independently through reinforcement learning.

It discovered new ways to solve problems—without being explicitly programmed.

It suggests that AIs training other AIs could be the next big leap in AI development.

We’re watching the AI takeoff scenario play out in real-time.

🔹 What does this mean for OpenAI and the future of AI?

OpenAI is still ahead in raw power, but DeepSeek is proving that efficiency is just as important as scale.

Here’s what happens next:

AI models will get cheaper to train – DeepSeek showed that $100M training budgets aren’t necessary.

AI will become more accessible – Open-weight models like R1 could lead to a wave of self-hosted, privacy-focused AI tools.

The AI race is global – The idea that only the U.S. can build top-tier AI is no longer true.

DeepSeek isn’t leading the AI race yet, but they just changed the game.

🔹 Is DeepSeek R1 the Future of AI?

DeepSeek R1 is a massive leap forward in AI efficiency and cost reduction. It proves that the AI revolution isn’t just about raw computing power—it’s about engineering smarter models.

What’s next?

Expect more AI breakthroughs from China.

U.S. labs will have to innovate beyond just scaling compute.

The AI landscape is shifting—and fast.

We’re entering a new phase of AI development, and DeepSeek R1 is just the beginning.
