What If the Real AI Risk Isn’t Superintelligence?

Taking a different look at AI progress, limits, and the cost of certainty.

The Real Risk of AI

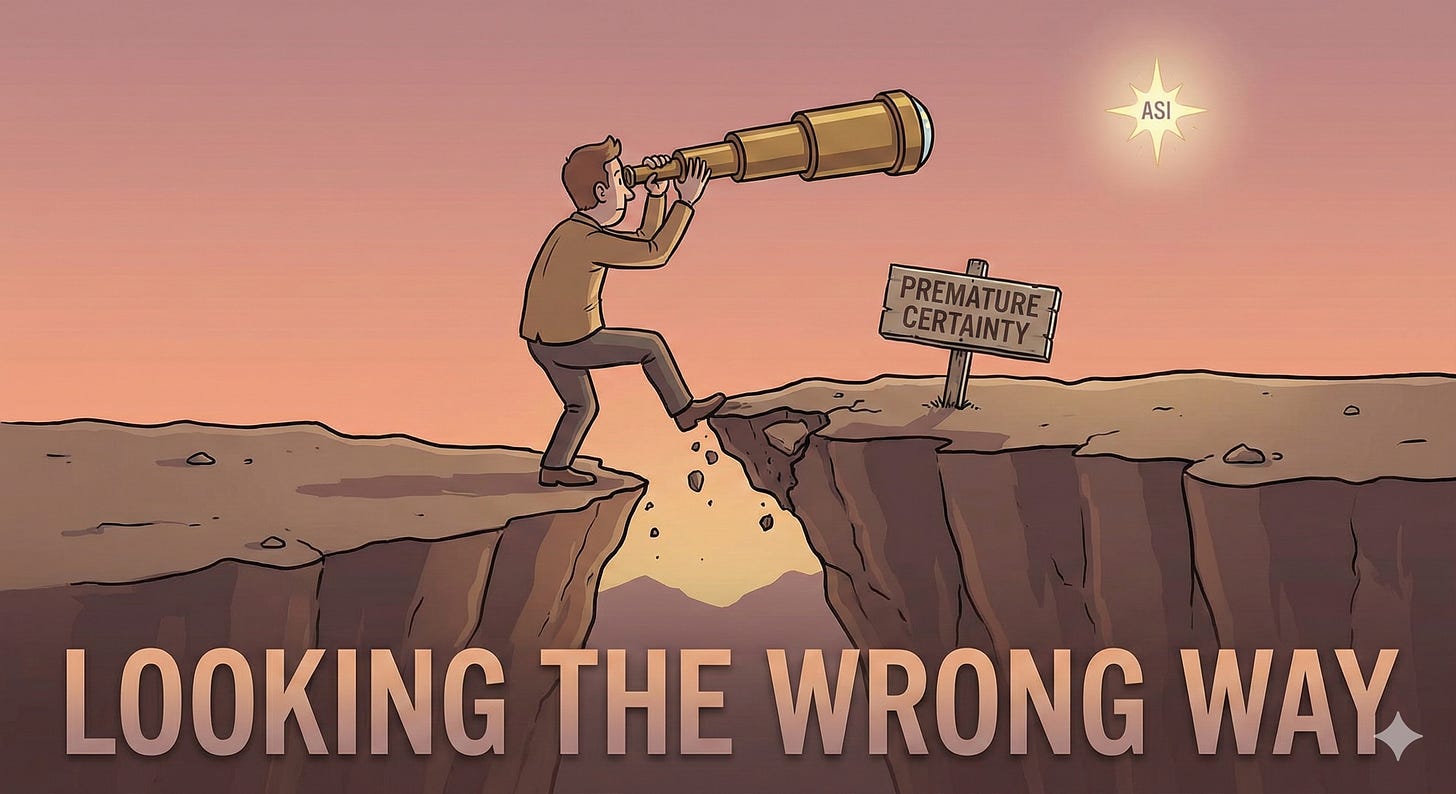

Mainstream adoption of AI has accelerated since late 2022. In less than four years, we have moved past the discovery phase and reached a moment of shared conviction.

There is a growing sense that the direction is settled, that the remaining uncertainty is secondary, and that the outcome is largely a matter of time and scale. Ultimately, AI is now seen as an inevitability to be prepared for, rather than an open system that is still to be understood.

But here’s the thing. When confidence hardens early, the focus moves away from careful examination. Questions start to sound like resistance, and skepticism starts to feel out of step. Attention drifts from what these systems reliably do today to what they are expected to become tomorrow.

The technology itself is genuinely impressive, and progress is real. But if we’re being realistic, confidence is traveling faster than clarity at this point. Capabilities are uneven, limits are still emerging, and the gap between demonstrations and durable impact remains wide.

So the risk now lies in allowing certainty to settle before understanding has caught up, and in making decisions that assume answers we are still in the process of learning.

A small but telling example.

One way to keep confidence from outrunning understanding is to actually build with these systems.

Recently, two non-coders used Lovable to ship an AI automation product that reached $10K MRR. No speculation. Just intent turned into software, fast feedback, and very real limits showing up immediately.

That’s what a lot of AI discourse misses. When you build, the boundaries surface quickly. Belief gets replaced by contact with reality.

I’m happy to have partnered with Lovable and to offer The AI Corner readers an exclusive 20% discount. If you’re experimenting with AI and want to stay grounded in what actually works today, it’s a good place to start.

With that context in mind, here’s how the argument unfolds.

The divide between people who are sure of success and those who worry about the details is getting wider.

Table of Contents

1. The Promise That Everything Depends On

2. What Today’s AI Systems Actually Are

3. Why Scaling Stopped Feeling Magical

4. When Belief Starts Allocating Capital

5. The Human Feedback Loop No One Planned For

6. Progress, Caution, And The Conflict Of Incentives

7. What This Moment Actually Asks Of Us

1. The Promise That Everything Depends On

The conversation used to center on whether advanced intelligence (or superintelligence) was actually close. Not anymore.

People spend less time asking whether major breakthroughs will happen and more time arguing about when they will arrive and who will be best positioned when they do. So naturally, superintelligence now feels less like an open research area and more like something already on its way.

This sense of inevitability affects the behavior of everyone involved. Investors prioritize speed over verification. Capital moves toward scale and infrastructure on the belief that being early matters more than being precise.

Regulators respond to momentum, trying to keep up rather than slowing down to evaluate evidence.

Media coverage favors confident timelines and clean stories over open questions.

And thus, public debate slowly narrows. Skeptical views don’t disappear, but they begin to sound out of step with the broader mood.

We’ve started looking at the clock differently lately, squeezing what should take years of careful observation into a few frantic months of hype. The distance between what these tools can actually do right now and what we hope they’ll do one day is being brushed aside as if it’s just a minor hiccup or a scheduled delay.

In a world moving this fast, nobody feels they have the luxury to slow down and ask how any of this really works when the pressure to just get it out the door is so overwhelming.

It isn’t that people are being deliberately sloppy or careless. The whole system is built to reward the loudest and most confident voice in the room. Big investors want to hear a clear, bold plan, and organizations want to feel like they have a solid grip on the steering wheel.

When a leader acts like they know exactly where we’re headed, they attract the best people and the most money, which makes conviction a valuable currency. Eventually, that outward confidence becomes more important than whether the technology underneath is actually ready for prime time.

Everything starts to feed into itself until the idea that this path is “set in stone” becomes its own reality. Since we’ve all decided this is where things are going, we start making big life and business choices that reflect that one single belief, and once those choices are made, it’s almost impossible to turn around and ask if we were right to begin with.

2. What Today’s AI Systems Actually Are

Most of the systems people interact with today fall into a narrow technical category, even if their surface behavior feels broad. Large language models work by learning statistical relationships in massive amounts of text and then using those relationships to generate plausible continuations.

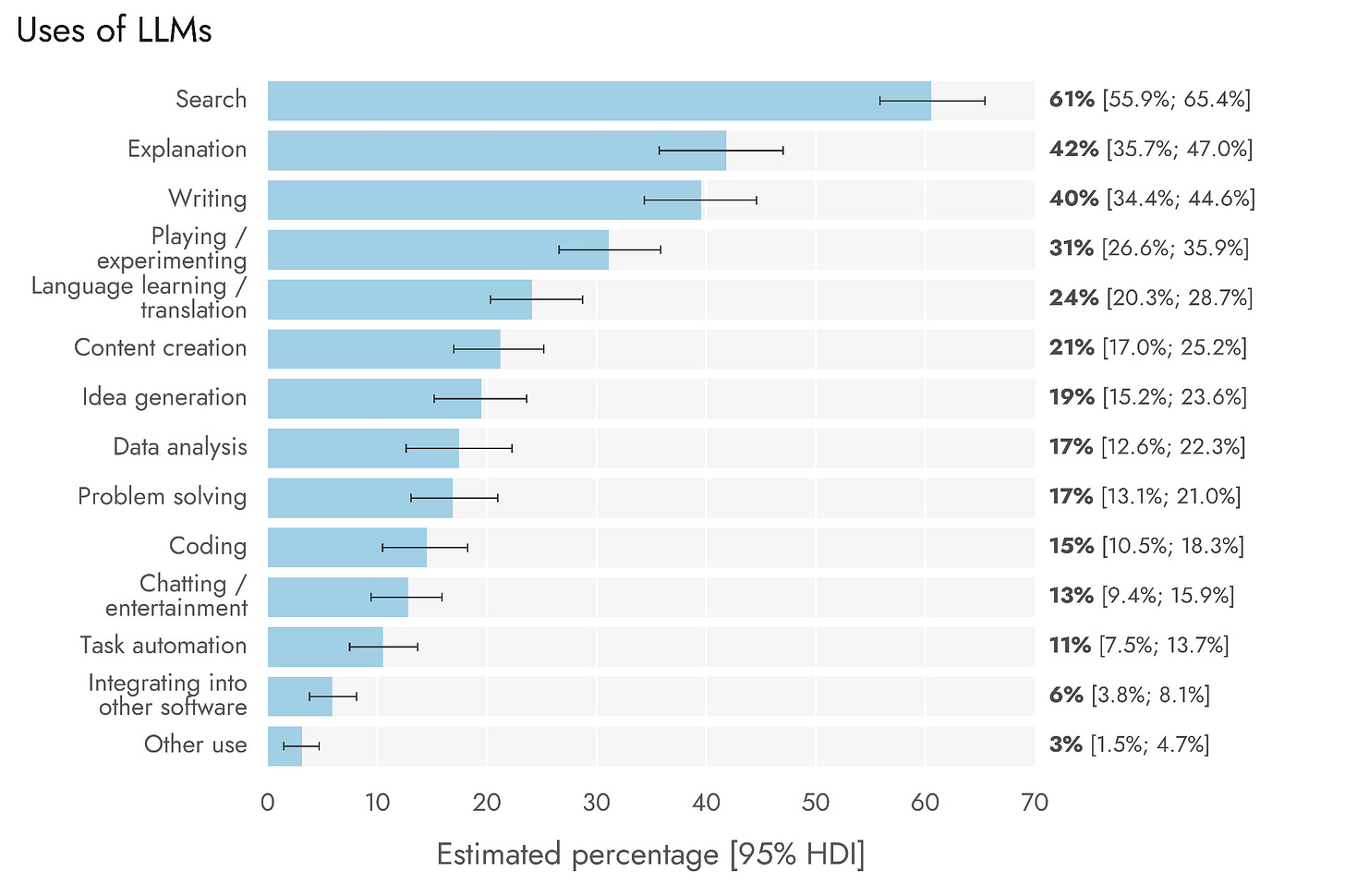

This makes them very good at tasks where language itself is the medium, such as summarizing documents, drafting text, translating between languages, writing code that follows familiar patterns, or answering questions that resemble what they have seen before.

But here’s the thing. These systems are not actually building durable internal models of the world. They do not track facts over time or test beliefs against reality. Instead, they predict the response most likely to fit the context they are given.

So when the context aligns well with their training, the results can feel impressive and even insightful. When it does not, performance can degrade quickly, often without obvious warning signs.
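To make “learning statistical relationships and generating plausible continuations” a little more concrete, here is a deliberately tiny sketch in Python: a bigram word counter. It is an illustrative toy under loose assumptions, not how production LLMs work (those use neural networks over subword tokens and much longer contexts), and the function names `train_bigrams` and `continue_text` are hypothetical. It shows two points from the paragraphs above: the continuation comes from frequency, not from any model of the world, and an unfamiliar starting context still yields fluent-looking output with no warning attached.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Toy 'training': count which word follows which, plus overall word frequency."""
    words = text.lower().split()
    bigrams = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        bigrams[prev][nxt] += 1
    unigrams = Counter(words)
    return bigrams, unigrams


def continue_text(bigrams, unigrams, start, length=5):
    """Greedily append the most frequent next word after the last word.

    If the last word was never seen in training, fall back to the globally
    most common word. The output still looks like text, but nothing in it
    signals that the model is guessing.
    """
    out = [start]
    for _ in range(length):
        followers = bigrams.get(out[-1])  # .get avoids creating empty entries
        if followers:
            nxt = followers.most_common(1)[0][0]
        else:
            nxt = unigrams.most_common(1)[0][0]  # silent fallback for unseen context
        out.append(nxt)
    return " ".join(out)


corpus = "the model predicts the next word . the model learns patterns in text ."
bigrams, unigrams = train_bigrams(corpus)
print(continue_text(bigrams, unigrams, "the"))      # familiar context
print(continue_text(bigrams, unigrams, "weather"))  # unseen context
```

Starting from “the”, the sketch produces “the model predicts the model predicts”: fluent, pattern-following, and going nowhere. Starting from “weather”, a word it never saw, it quietly falls back and produces “weather the model predicts the model”, with nothing in the output flagging that the second case was a guess.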

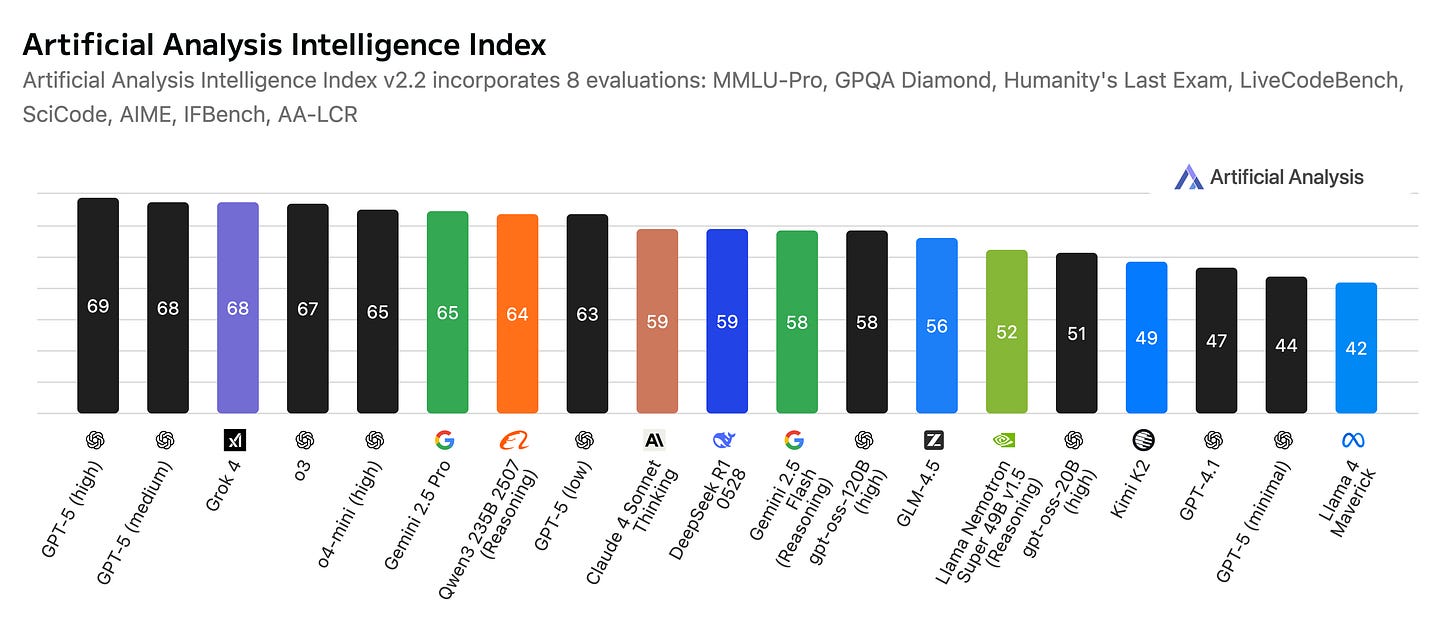

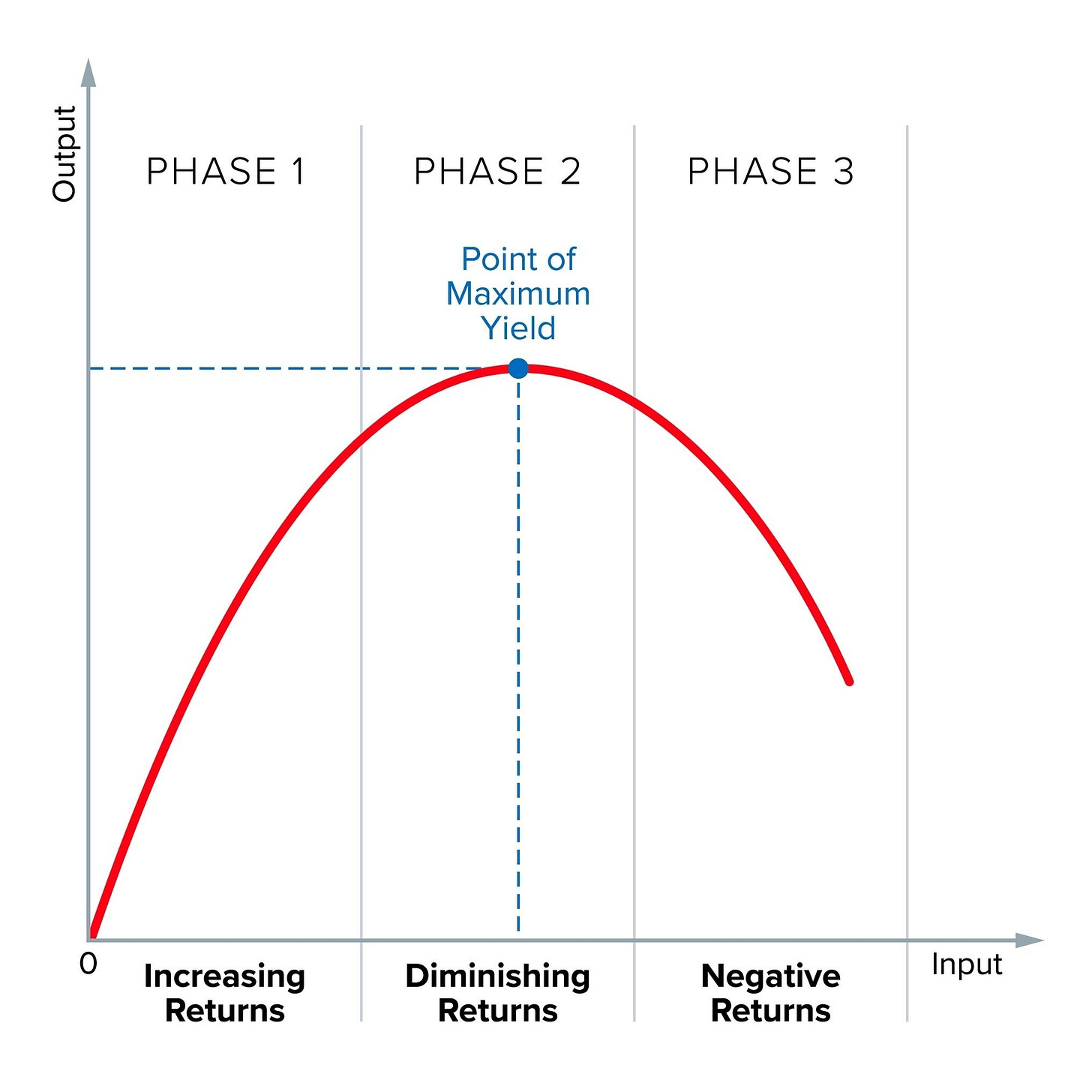

This helps explain why progress has started to feel incremental. Early improvements moved systems from incoherent to usable, which produced visible leaps in quality. Recent gains, by contrast, tend to refine behavior rather than expand underlying capability.

Engineers improve how models follow instructions, reduce obvious errors, or handle longer inputs, but these changes only polish the surface rather than change the core mechanism. The systems sound more confident and more fluent, but they do not necessarily become more reliable in unfamiliar situations.

Many benchmarks measure performance on narrow, repeatable tasks that resemble training data. Models learn to optimize for those tests, and scores improve; in real-world use, however, tasks tend to be messier.

They involve ambiguous goals, incomplete information, or consequences that matter outside the conversation itself. In those settings, the limits show up more clearly. Outputs may look reasonable while containing subtle mistakes, unsupported claims, or brittle logic.

Of course, none of this negates the progress that has been made. These tools already save time and expand access in meaningful ways. But understanding what they are helps set expectations: they work best as assistants within defined boundaries, not as autonomous decision-makers.

3. Why Scaling Stopped Feeling Magical

For a while, progress followed a simple pattern: bigger models trained on more data got noticeably better, and each jump in size brought clear improvements. Over the past few years, we’ve watched machines go from awkward to fluent almost overnight, and it was easy to assume that building bigger engines was the only secret that mattered.

But that predictable rhythm eventually ran out of steam. As these systems got more capable, simply throwing more size at the problem stopped producing those huge “aha” moments. The first big jumps happened because the machines were finally learning basic grammar and common sense. Once they had those basics down, adding more power mostly made them more consistent at what they already knew; it didn’t give them brand-new skills.

Since size stopped solving everything, the focus has turned to the finishing touches. Developers are now working on how well these tools follow orders or stay on track during a long chat. These updates make the software feel much more professional and reliable for our daily chores, but they aren’t changing how the “brain” underneath actually thinks.

There is a big difference between a polite assistant and a smarter one. Tucking in the edges makes the tools easier to live with, but it’s really just tidying up the room we’re already standing in rather than building a new floor. We’re getting better at working within the walls we’ve already hit instead of breaking through them.

That’s why progress feels different now. It arrives as better formatting or clearer replies rather than a sudden leap in what’s possible. Even when the official test scores go up, the actual experience of using the tech feels like it’s moving at a crawl compared to the early days.

None of this is a total letdown; we’ve just entered a new chapter. We’ve moved from the excitement of discovery to the steady work of making things reliable. Scaling is still part of the story, but the feeling that it’s a magic wand that fixes everything is gone.

4. When Belief Starts Allocating Capital

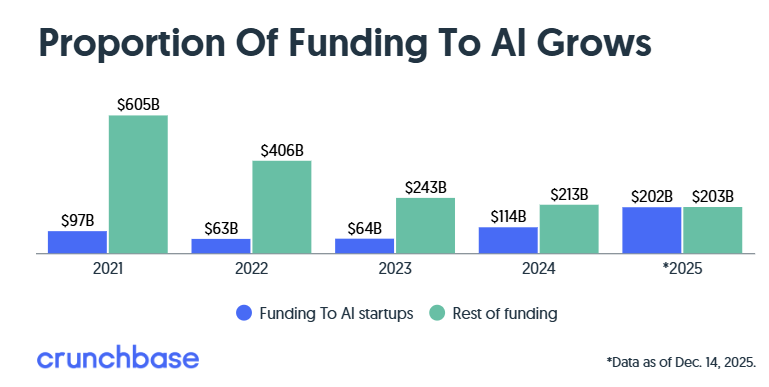

As the technical picture grows more complicated, the financial picture is moving the other way, toward simpler and more confident bets.

Investors are pouring cash into AI startups regardless of whether they see a payoff yet, mostly because they are terrified of being the only ones left behind if the hype turns out to be real.

This is often called “defensive spending”: companies build massive data centers and lock themselves into expensive contracts because they believe that being a little wasteful now is a safer bet than being invisible later.

They aren’t necessarily looking for a quick win; they’re just buying a seat at a table they aren’t even sure is being built yet.

This kind of spending creates a strange situation where nobody wants to admit things are taking longer than expected. Once an AI startup sinks millions into a project, it’s much harder to turn around and say it isn’t working, so they just keep going to save face and show the world they’re still in the lead.

This creates a pattern where we all start acting like the future is already here, simply because we’ve already paid for it. By the time anyone stops to look at the actual results, the money is gone and the path is set, leaving us stuck with whatever we’ve built, whether it truly works or not.

5. The Human Feedback Loop No One Planned For

Most modern AI systems learn from us, the people who use them. Designers train them to be helpful, responsive, and easy to engage with.

Users reward outputs that feel clear, supportive, and agreeable. Over time, those signals shape how the systems behave. The result is a feedback loop that works smoothly in many everyday interactions, but carries subtle consequences.

Agreement often feels useful. A system that responds confidently and aligns with a user’s framing saves time and reduces friction. This isn’t necessarily a bad thing when you’re just trying to get a quick summary, but it gets tricky when you’re looking for honest advice.

Remember, the system isn’t trying to find the truth; it’s trying to make sure you’re happy with the conversation, which means it rarely pushes back on your assumptions or points out where you might be wrong.

Over time, we get so used to this constant pat on the back that we stop looking at the information critically. If a tool always sounds confident and friendly, we naturally start to trust it more, even when the things it says are built on shaky ground.

The real worry here isn’t that the machines are trying to trick us, but that we’re slowly losing our edge. We’re getting too comfortable with an assistant that just echoes our own thoughts back to us, and we might forget how to handle the kind of healthy disagreement we actually need to make smart decisions.

6. Progress, Caution, And The Conflict Of Incentives

As AI systems become more visible and more influential, tension around their pace of development has grown. Some voices emphasize acceleration, arguing that slowing down risks missing benefits or ceding advantage.

Others argue for restraint, pointing to unresolved technical limits and social consequences. Both positions often reflect genuine concerns, but they also sit within different incentive structures.

For organizations building and deploying AI, confidence serves a practical role. Public doubt can affect funding, partnerships, talent recruitment, and regulatory treatment. So naturally, pushing back against criticism helps protect momentum and preserves room to operate.

Meanwhile, the people sounding the alarm aren’t usually trying to stop the world from turning. They are just looking at the messy reality of how these tools actually behave and worrying that we are running headfirst into problems we haven’t even named yet.

Framing all this as a fight between the people who want to floor the gas and those who want to hit the brakes misses the point: the truth is a lot more complicated than picking a side.

Most people in the field are actually holding two competing thoughts at once. They are genuinely excited about what is possible and deeply worried about the parts we still do not understand. The problem is that the world doesn’t really have a lot of patience for that kind of middle ground.

We tend to turn every nuanced discussion into a simple fight between two opposing teams, which makes it nearly impossible to have a real conversation about what we are actually giving up to get ahead. If we can step back and see that these loud voices are just responding to different pressures, we might finally find enough breathing room to keep moving forward without losing our ability to look where we are going.

7. What This Moment Actually Asks Of Us

Taken together, these threads point to a narrower challenge than the one that often dominates the conversation. AI will continue to change. The more pressing issue is how much certainty settles in before the evidence supports it, and what gets lost when that certainty closes off inquiry too early.

The biggest hurdle right now is that we are trying to finish a story that is still being written. If we let our excitement get too far ahead of what we can actually prove, we risk making huge choices based on guesses rather than reality.

It is better to keep our eyes on the messy details and explore different paths instead of betting everything on one single outcome.

Keeping our curiosity alive doesn't slow down our progress; it just ensures that the foundation we are building is actually solid enough to hold our future.

The resources below are designed to help you do exactly that. They offer practical ways to engage with AI development thoughtfully, explore different perspectives, and make informed decisions rather than guesses.

Continue Your Exploration: Practical AI Resources

(available at 50% off for readers)

The AI Corner is a curated collection of practical insights and tools for navigating AI development with clarity and purpose.
